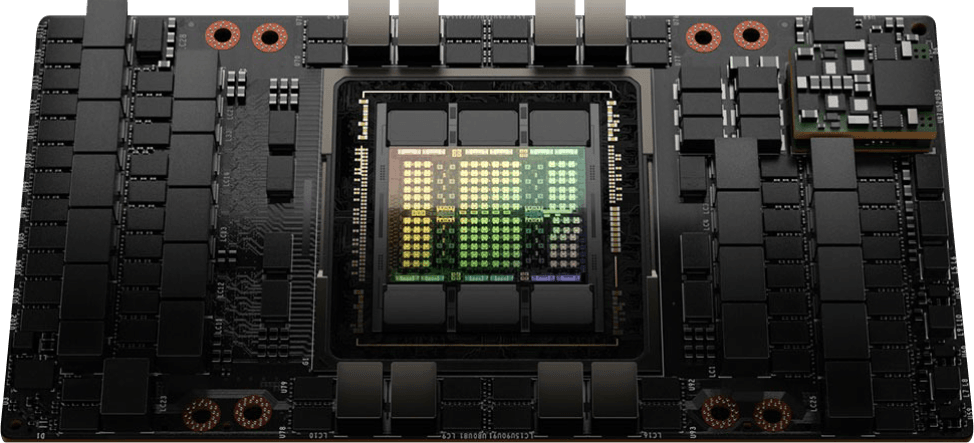

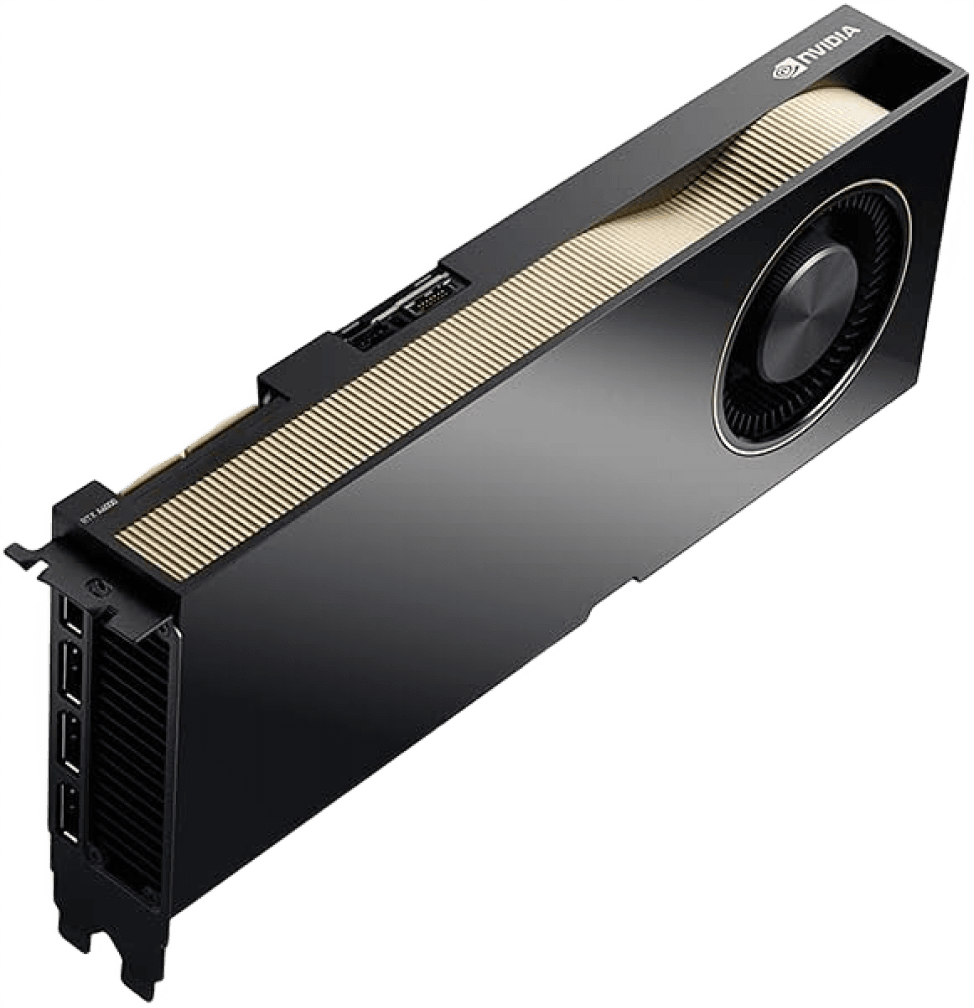

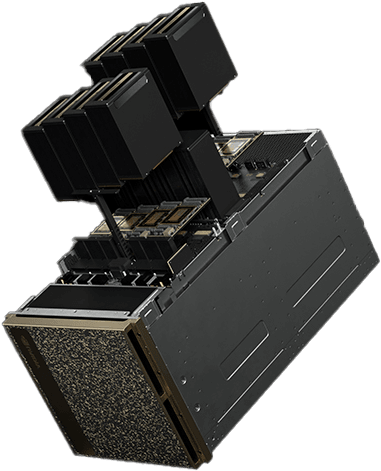

NVIDIAHGX B200

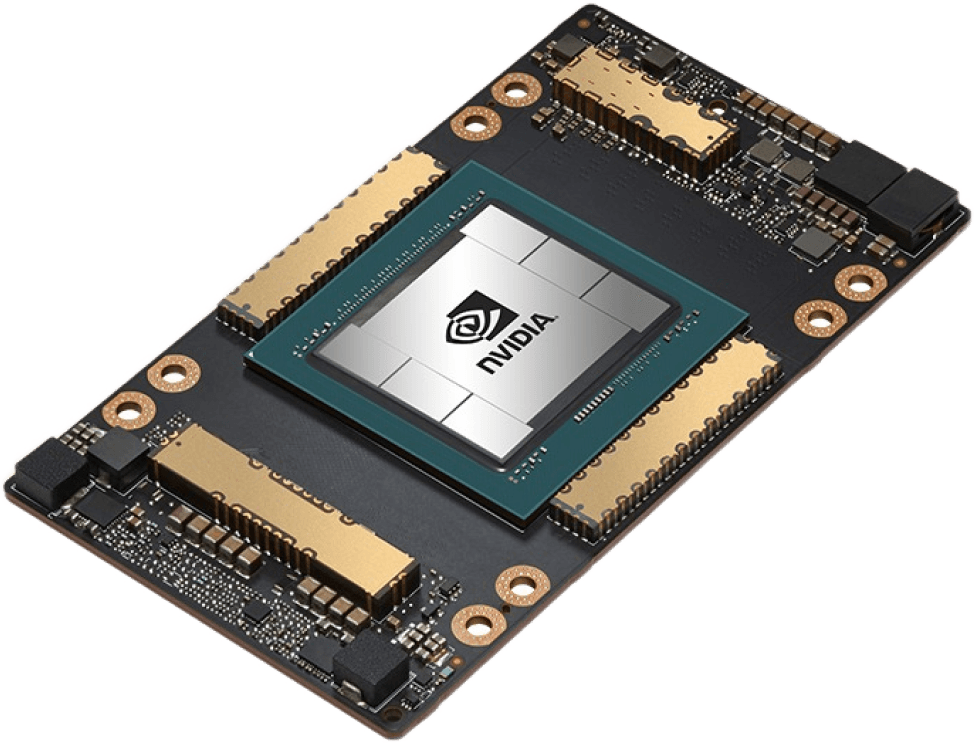

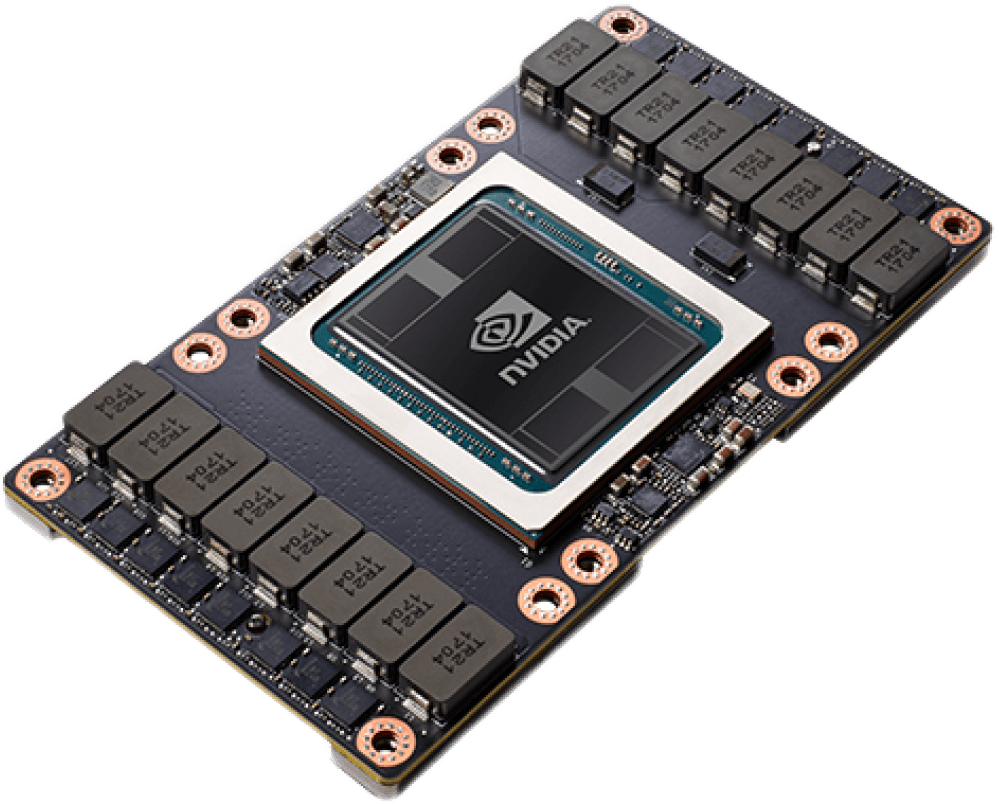

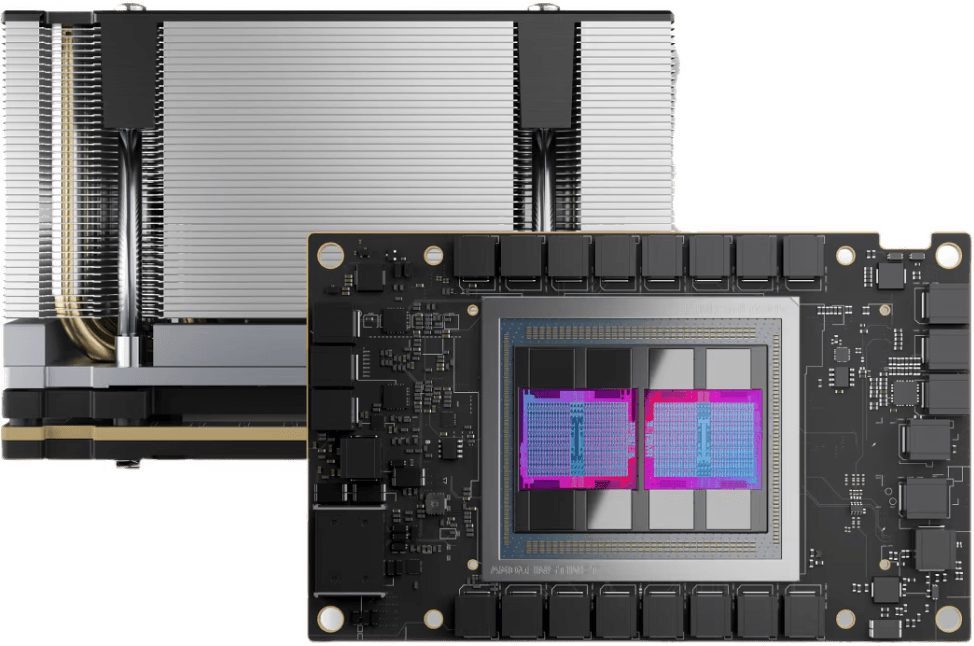

Eight NVIDIA Blackwell GPUs interconnected with fifth-generation NVIDIA® NVLink®, B200 delivers leading-edge performance, offering 3X the training performance and 15X the inference performance of previous generations.

The NVIDIA HGX B200 is perfect for a wide range of workloads

Deploying AI based workloads on CUDO Compute is easy and cost-effective. Follow our AI related tutorials.

Available at the most cost-effective pricing

Launch your AI products faster with on-demand GPUs and a global network of data center partners

Enterprise

We offer a range of solutions for enterprise customers.

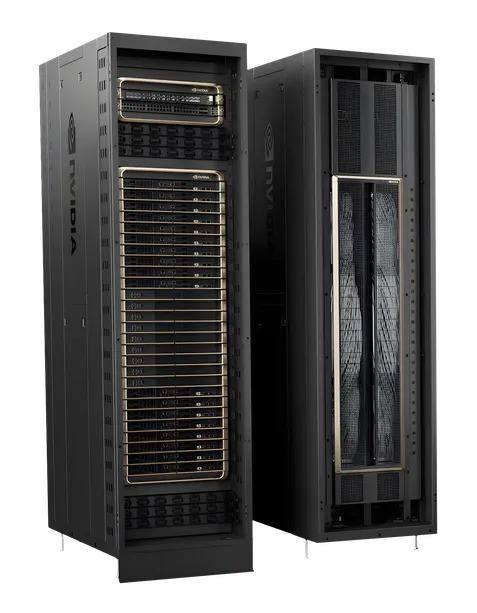

- Powerful GPU clusters

- Scalable data center colocation

- Large quantities of GPUs and hardware

- Optimise to your requirements

- Expert installation

- Scale as your demand grows

Specifications

Browse specifications for the NVIDIA HGX B200 GPU

| Starting from | Contact us for pricing |

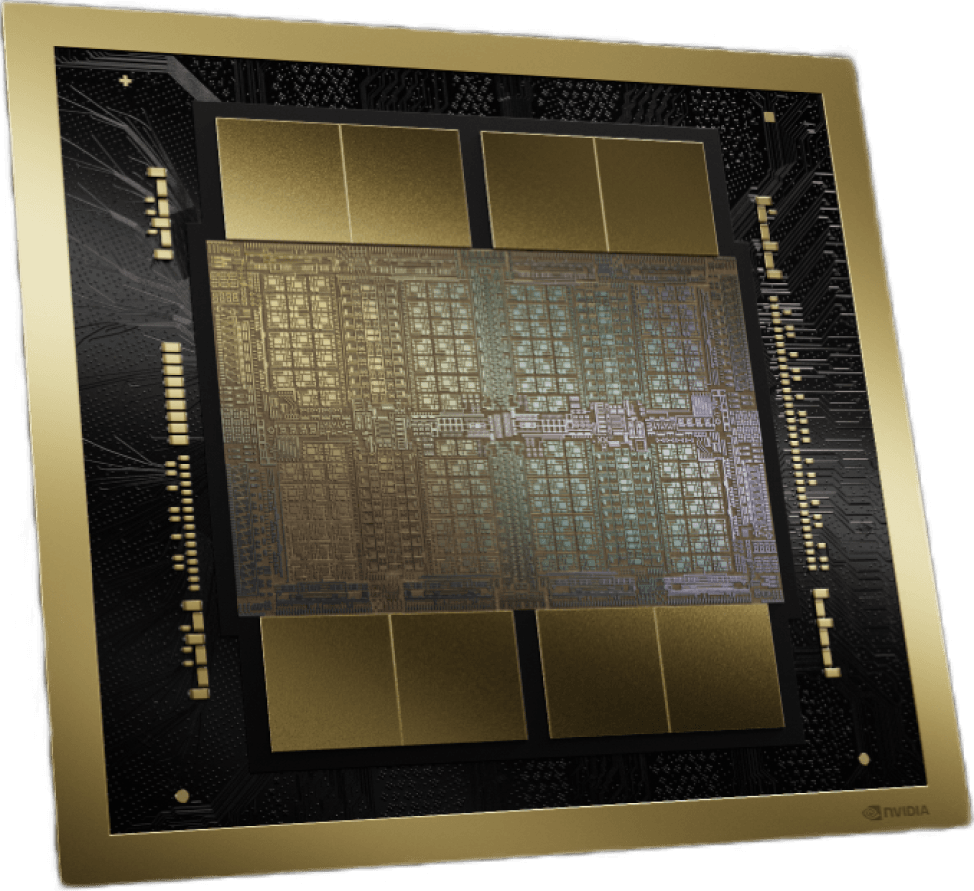

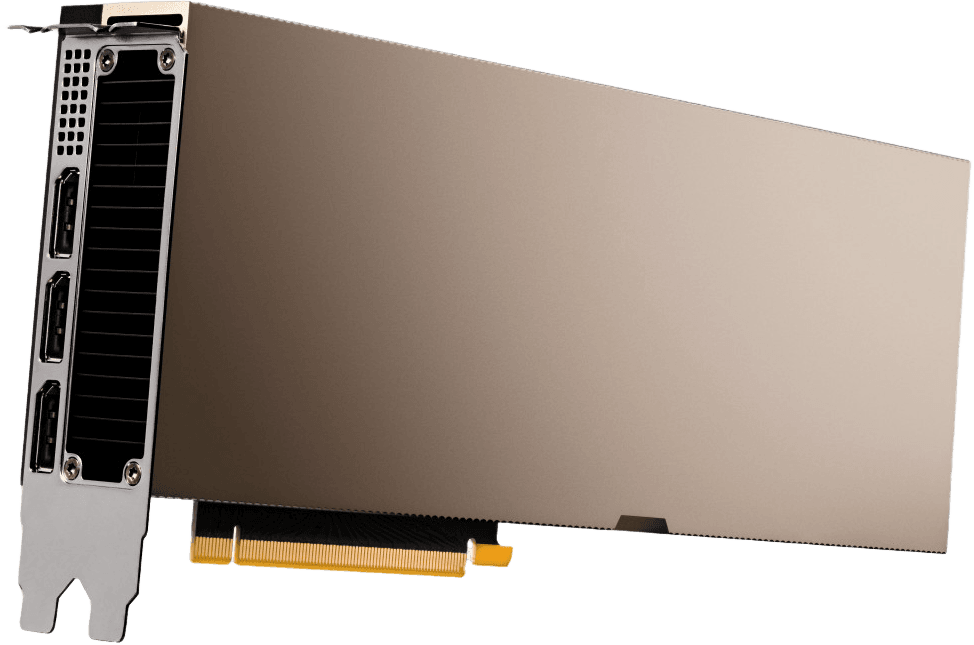

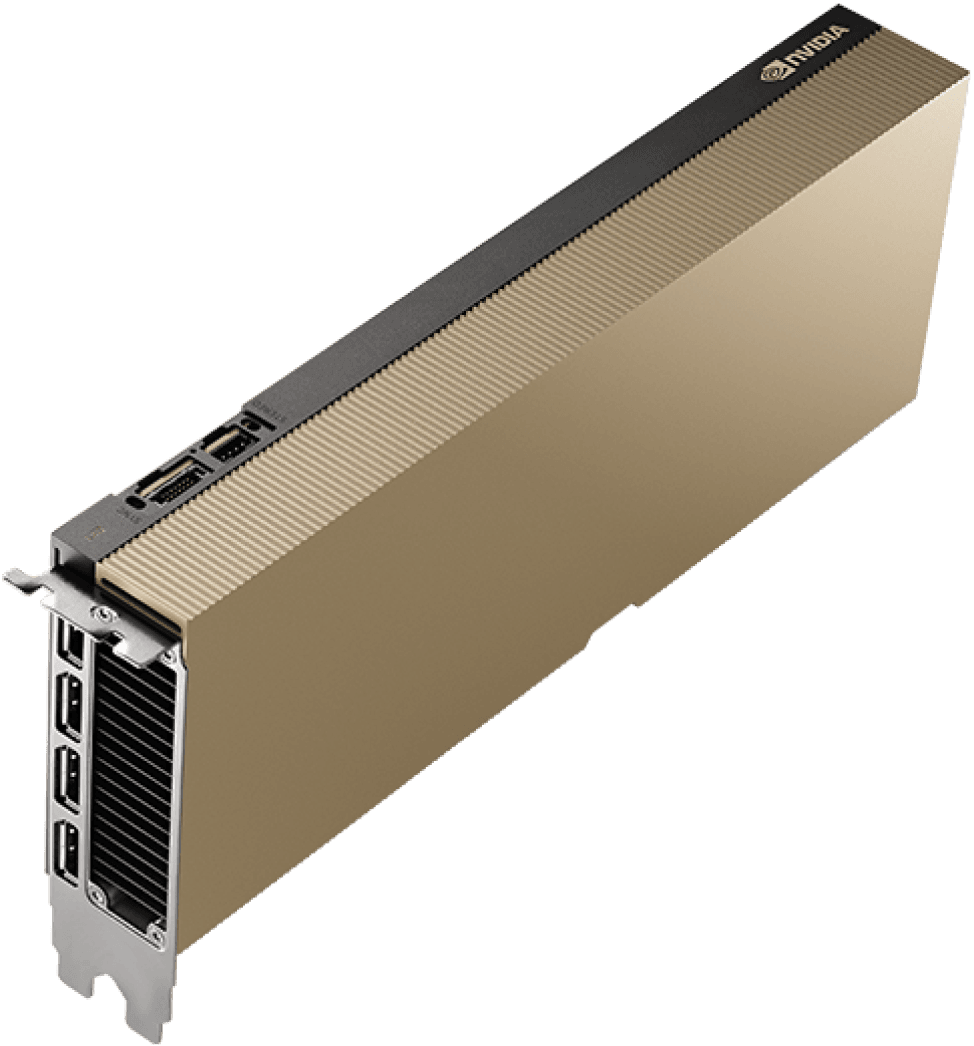

| Architecture | NVIDIA Blackwell |

| GPU | 8x NVIDIA Blackwell GPUs |

| GPU Memory | 1,440GB total GPU memory |

| Performance | 72 petaFLOPS training and 144 petaFLOPS inference |

| CPU | 2 Intel® Xeon® Platinum 8570 Processors - 112 Cores total, 2.1 GHz(Base), 4 GHz(Max Boost) |

| System Memory | Up to 4TB |

| Networking | 4x OSFP ports serving 8x single-port NVIDIA ConnectX-7 VPI | 2x dual-port QSFP112 NVIDIA BlueField-3 DPU |

| Management Network | 10Gb/s onboard NIC with RJ45, 100Gb/s dual-port ethernet NIC, Host baseboard management controller (BMC) with RJ45 |

| Storage | OS: 2x 1.9TB NVMe M.2, Internal storage: 8x 3.84TB NVMe U.2 |

| Software | NVIDIA AI Enterprise: Optimized AI Software, NVIDIA Base Command™: Orchestration, Scheduling, and Cluster Management, DGX OS / Ubuntu: Operating system |

Ideal uses cases for the NVIDIA HGX B200 GPU

Explore uses cases for the NVIDIA HGX B200 including Powerhouse of AI Performance, Real Time Large Language Model Inference, High-performance computing.

Powerhouse of AI Performance

Powered by the NVIDIA Blackwell architecture’s advancements in computing, B200 delivers 3X the training performance and 15X the inference performance of H100.

Real Time Large Language Model Inference

Token-to-token latency (TTL) = 50ms real time, first token latency (FTL) = 5s, input sequence length = 32,768, output sequence length = 1,028, 8x eight-way H100 GPUs air-cooled vs. 1x eight-way B200 air-cooled, per GPU performance comparison.

High-performance computing

From complex scientific simulations to weather forecasting and intricate financial modelling, the B200 will empower diverse organizations to accelerate high-performance computing tasks. Its unmatched memory bandwidth and processing capabilities ensure smooth operation for workloads of any scale, allowing you to achieve unmatched results faster than ever.

Browse alternative GPU solutions for your workloads

Access a wide range of performant NVIDIA and AMD GPUs to accelerate your AI, ML & HPC workloads

An NVIDIA preferred partner for compute

We're proud to be an NVIDIA preferred partner for compute, offering the latest GPUs and high-performance computing solutions.

Also trusted by our other key partners:

Talk to sales

Reserve GPUs. Access a HGX B200 GPU Cloud alongside other high performance models for as long as you need it.

Deployment & scaling. Seamless deployment alongside expert installation, ready to scale as your demands grow.

"CUDO Compute is a true pioneer in aggregating the world's cloud in a sustainable way, enabling service providers like us to integrate with ease"

Get started today or speak with an expert...

Available Mon-Fri 9am-5pm UK time