AMD MI250/300

Compared with traditional on-premises GPUs, cloud-based AMD MI250 and MI300 GPUs offer greater flexibility and scalability. Developers can spin instances up or down to match changing HPC processing needs without worrying about hardware maintenance or upgrades. Cloud-based GPUs also offer lower upfront costs and faster deployment, letting developers focus on core AI/ML development rather than managing hardware.

The AMD MI250/300 is perfect for a wide range of workloads

Deploying AI-based workloads on CUDO Compute is easy and cost-effective. Follow our AI-related tutorials.

Available at the most cost-effective pricing

Launch your AI products faster with on-demand GPUs and a global network of data center partners

Virtual machines

The ideal deployment strategy for AI workloads on an MI250/300.

- Up to 8 GPUs per virtual machine

- Flexible

- Network attached storage

- Private networks

- Security groups

- Images

Enterprise

We offer a range of solutions for enterprise customers.

- Powerful GPU clusters

- Scalable data center colocation

- Large quantities of GPUs and hardware

- Optimize to your requirements

- Expert installation

- Scale as your demand grows

Specifications

Browse specifications for the AMD MI250/300 GPU

| Specification | AMD Instinct™ MI250 | AMD Instinct™ MI300X |
| --- | --- | --- |
| Starting from | Contact us for pricing | Contact us for pricing |
| Architecture | CDNA 2 | CDNA 3 |
| Family | Instinct | Instinct |
| Series | MI200 Series | MI300 Series |
| Lithography | TSMC 6nm FinFET | TSMC 5nm / 6nm FinFET |
| Stream Processors | 13,312 | 19,456 |
| Compute Units | 208 | 304 |
| Peak Engine Clock | 1700 MHz | 2100 MHz |
| Peak Half Precision (FP16) Performance | 362.1 TFLOPs | 1.3 PFLOPs |
| Peak Single Precision Matrix (FP32) Performance | 90.5 TFLOPs | 163.4 TFLOPs |
| Peak Double Precision Matrix (FP64) Performance | 90.5 TFLOPs | 163.4 TFLOPs |
| Peak Single Precision (FP32) Performance | 45.3 TFLOPs | 163.4 TFLOPs |
| Peak Double Precision (FP64) Performance | 45.3 TFLOPs | 81.7 TFLOPs |
| Peak INT4 Performance | 362.1 TOPs | N/A |
| Peak INT8 Performance | 362.1 TOPs | 2.6 POPs |
| Peak bfloat16 Performance | 362.1 TFLOPs | 1.3 PFLOPs |
| Thermal Design Power (TDP) | 500W (560W peak) | 750W peak |
| GPU Memory | 128 GB HBM2e | 192 GB HBM3 |
| Memory Interface | 8192-bit | 8192-bit |
| Memory Clock | 1.6 GHz | 5.2 GHz |
| Peak Memory Bandwidth | 3.2 TB/s | 5.3 TB/s |
| Memory ECC Support | Yes (Full-Chip) | Yes (Full-Chip) |
| GPU Form Factor | OAM Module | OAM Module |
| Bus Type | PCIe® 4.0 x16 | PCIe® 5.0 x16 |
| Infinity Fabric™ Links | 8 | 8 |
| Peak Infinity Fabric™ Link Bandwidth | 100 GB/s | 128 GB/s |
| Cooling | Passive OAM | Passive OAM |
| Supported Technologies | AMD CDNA™ 2 Architecture, AMD ROCm™ - Ecosystem without Borders, AMD Infinity Architecture | AMD CDNA™ 3 Architecture, AMD ROCm™ - Ecosystem without Borders, AMD Infinity Architecture |
| RAS Support | Yes | Yes |
| Page Retirement | Yes | Yes |
| Page Avoidance | N/A | Yes |
| SR-IOV | N/A | Yes |
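Several specification rows can be cross-checked against one another. The Python sketch below is an illustration under stated assumptions, not an AMD formula. It takes peak memory bandwidth as bus width × per-pin data rate ÷ 8. It assumes the MI250's 1.6 GHz HBM2e clock is double data rate (3.2 Gb/s per pin) and that the MI300X's 5.2 GHz figure is already the per-pin data rate. It takes peak FP64 vector throughput as stream processors × 2 FLOPs (one fused multiply-add) × peak engine clock. The function names are hypothetical.

```python
# Cross-check rows of the MI250/MI300X specification table.
# Assumptions (not stated in the table): MI250 HBM2e at 1.6 GHz is double data
# rate (3.2 Gb/s per pin); the MI300X "5.2 GHz" figure is the per-pin data rate;
# each stream processor completes one FP64 FMA (2 FLOPs) per clock.
# Function names are hypothetical, for illustration only.

def peak_memory_bandwidth_tbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Bus width (bits) x per-pin data rate (Gb/s) / 8 bits per byte, in TB/s."""
    return bus_width_bits * data_rate_gbps / 8 / 1000


def peak_fp64_vector_tflops(stream_processors: int, peak_clock_mhz: int) -> float:
    """Stream processors x 2 FLOPs per FMA x clock (MHz), converted to TFLOPs."""
    return stream_processors * 2 * peak_clock_mhz / 1e6


# name: (stream processors, peak engine clock MHz, bus width bits, per-pin Gb/s)
GPUS = {
    "MI250": (13_312, 1700, 8192, 1.6 * 2),  # assumed double data rate
    "MI300X": (19_456, 2100, 8192, 5.2),     # assumed already per-pin rate
}

if __name__ == "__main__":
    for name, (sps, clock_mhz, bus_bits, rate_gbps) in GPUS.items():
        bw = peak_memory_bandwidth_tbs(bus_bits, rate_gbps)
        fp64 = peak_fp64_vector_tflops(sps, clock_mhz)
        print(f"{name}: {bw:.4f} TB/s peak memory bandwidth, "
              f"{fp64:.1f} TFLOPs peak FP64 vector")
```

Run as a script, it prints 3.2768 TB/s and 45.3 TFLOPs for the MI250, and 5.3248 TB/s and 81.7 TFLOPs for the MI300X. The FP64 values and the MI300X bandwidth match the table after rounding. The MI250's 3.2768 TB/s is listed in the table as 3.2 TB/s.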

Ideal use cases for the AMD MI250/300 GPU

Explore use cases for the AMD MI250/300, including enhanced natural language processing, faster deep learning training, and real-time video analytics.

Enhanced natural language processing

Use the efficient architecture of AMD MI250 and MI300 GPUs to accelerate natural language processing tasks such as text classification, sentiment analysis, and machine translation. Developers can build more sophisticated chatbots, voice assistants, and other NLP-driven applications that understand and respond to human language faster and more accurately.

Faster deep learning training

Train deep neural networks up to 4x faster on cloud-based AMD MI250 and MI300 GPUs than on traditional CPUs. This lets developers experiment with larger datasets and more complex models, which can improve model accuracy and yield better insights for customer applications.

Real-time video analytics

Use the parallel processing capabilities of AMD MI250 and MI300 GPUs to perform real-time video analytics in the cloud. Developers can analyze live video streams, detect objects, classify actions, and track movements with minimal latency, enabling applications such as smart surveillance and autonomous vehicles.

Browse alternative GPU solutions for your workloads

Access a wide range of performant NVIDIA and AMD GPUs to accelerate your AI, ML & HPC workloads

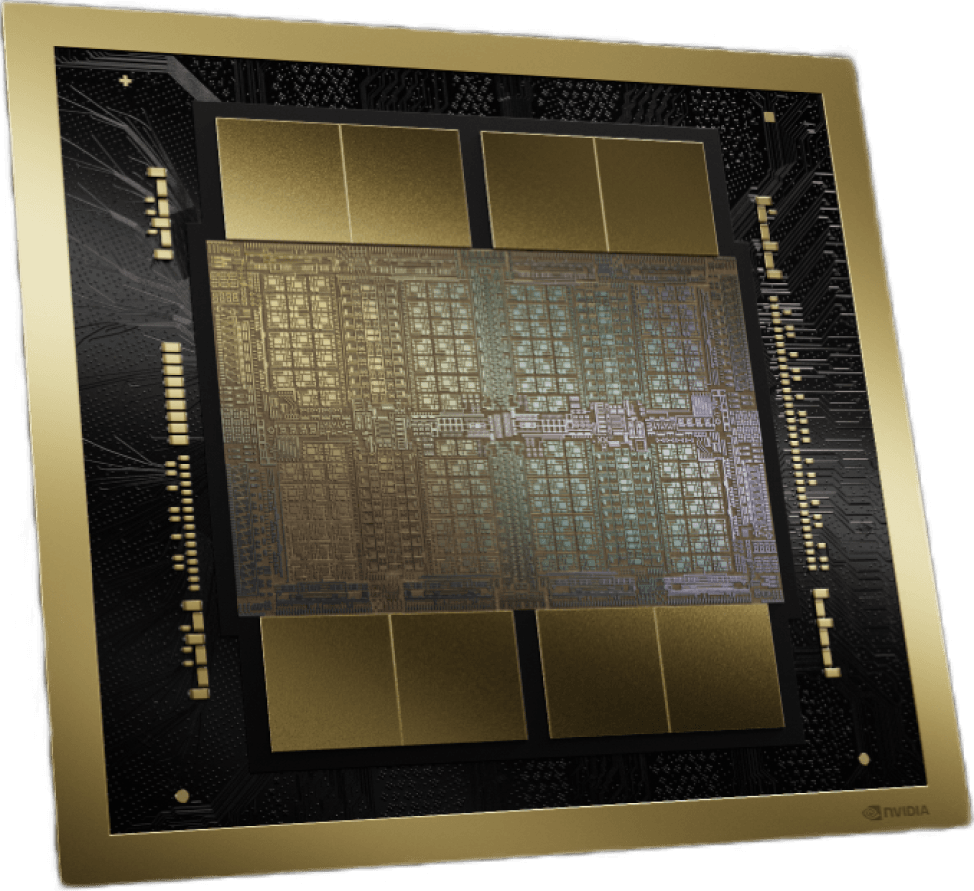

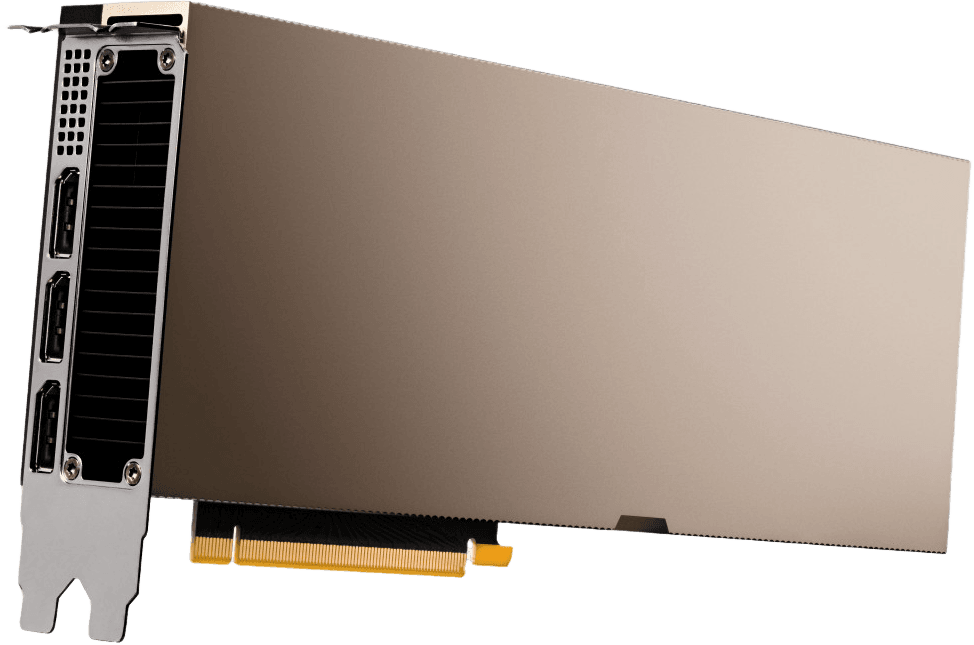

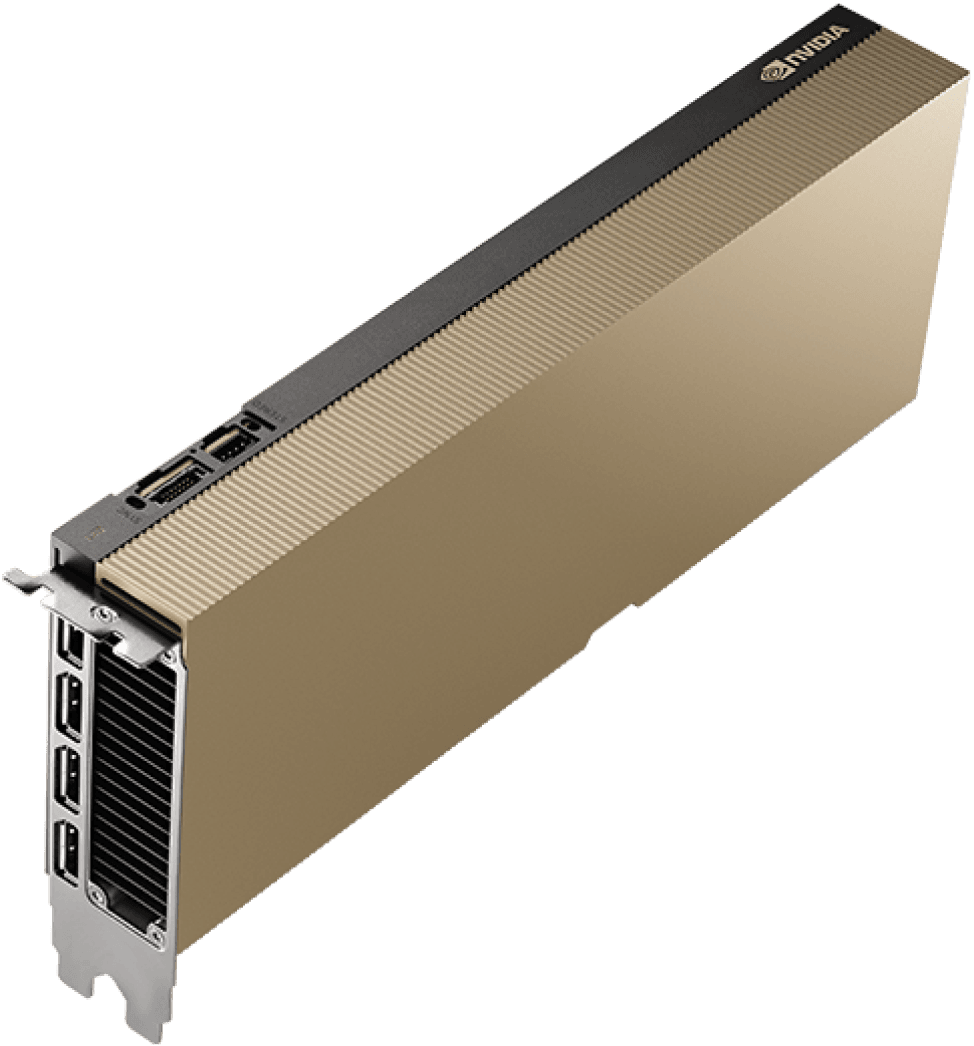

Trusted by NVIDIA. Built for you.

As a preferred partner, we offer NVIDIA’s most advanced GPUs with tested infrastructure, ready for AI, HPC and demanding workloads.



Talk to sales

Reserve GPUs. Access an MI250/300 GPU cloud alongside other high-performance models for as long as you need it.

Deployment & scaling. Seamless deployment alongside expert installation, ready to scale as your demands grow.

"CUDO Compute is a true pioneer in aggregating the world's cloud in a sustainable way, enabling service providers like us to integrate with ease"

VPS AI


Get started today or speak with an expert...

Available Mon-Fri 9am-5pm UK time