NVIDIA H200

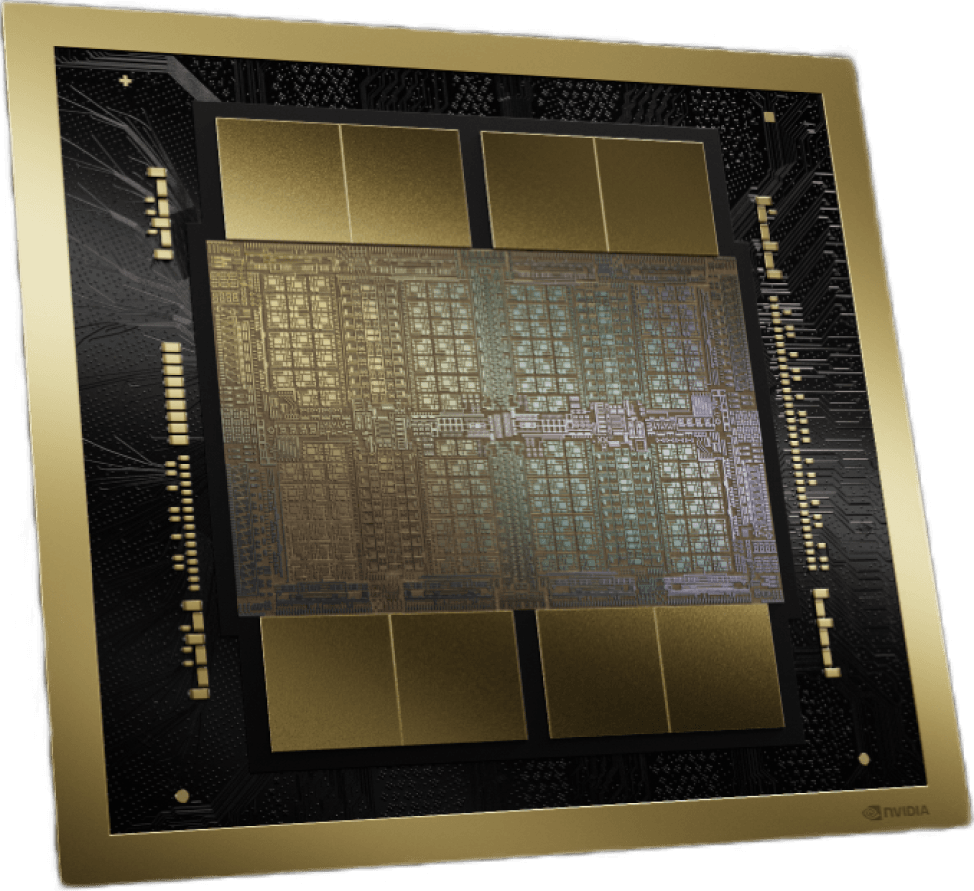

The NVIDIA H200 is an ideal choice for large-scale AI applications. Built on the NVIDIA Hopper architecture, it pairs 141GB of HBM3e memory with 4.8 TB/s of memory bandwidth, accelerating AI training and inference on larger models.

The NVIDIA H200 is perfect for a wide range of workloads

Deploying AI-based workloads on CUDO Compute is easy and cost-effective. Follow our AI-related tutorials.

Available at the most cost-effective pricing

Launch your AI products faster with on-demand GPUs and a global network of data center partners

Virtual machines

The ideal deployment strategy for AI workloads with an H200.

- Up to 8 GPUs / virtual machine

- Flexible

- Network attached storage

- Private networks

- Security groups

- Images
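As a rough sizing aid, here is a sketch that estimates how many H200s a model's weights need, using the 141GB per GPU and up to 8 GPUs per virtual machine quoted on this page. The function names and the `headroom` factor (assumed to be 80% of each GPU's memory available for weights) are illustrative assumptions, not CUDO Compute tooling; KV cache, activations and parallelism overheads will change the real number.

```python
import math

# Figures quoted on this page: 141GB of memory per H200, up to 8 GPUs per VM.
H200_MEMORY_GB = 141
MAX_GPUS_PER_VM = 8


def gpus_for_weights(num_params: float, bytes_per_param: float,
                     headroom: float = 0.8) -> int:
    """Rough count of H200s needed just to hold a model's weights.

    `headroom` is an assumed fraction of each GPU's memory used for weights;
    the remainder is left for KV cache, activations and framework overhead.
    """
    weights_gb = num_params * bytes_per_param / 1e9
    usable_gb_per_gpu = H200_MEMORY_GB * headroom
    return math.ceil(weights_gb / usable_gb_per_gpu)


def fits_in_one_vm(num_params: float, bytes_per_param: float) -> bool:
    """True if the weights-only estimate fits within a single 8-GPU VM."""
    return gpus_for_weights(num_params, bytes_per_param) <= MAX_GPUS_PER_VM


if __name__ == "__main__":
    # Hypothetical 70B-parameter model in FP16 (2 bytes) and FP8 (1 byte).
    print(gpus_for_weights(70e9, 2), gpus_for_weights(70e9, 1))
```

Under these assumptions a 70B-parameter model in FP16 (about 140GB of weights) needs two GPUs, while the same model in FP8 fits on one.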

Pricing available on request

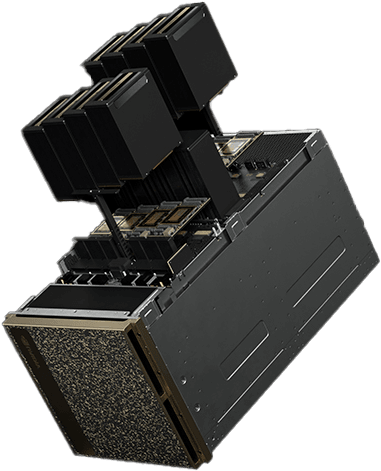

Bare metal

Complete control over a dedicated physical machine.

- Powered by renewable energy

- Up to 8 GPUs / host

- No noisy neighbors

- Spectrum-X local networking

- 300Gbps external connectivity

- NVMe SSD storage

from $23.29/hr

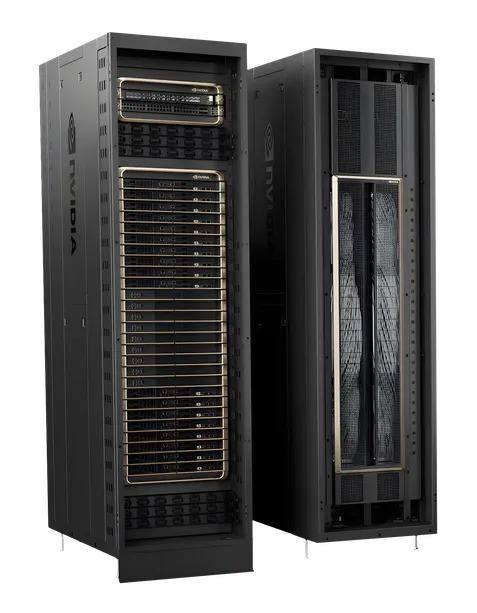

Enterprise

We offer a range of solutions for enterprise customers.

- Powerful GPU clusters

- Scalable data center colocation

- Large quantities of GPUs and hardware

- Optimized to your requirements

- Expert installation

- Scale as your demand grows

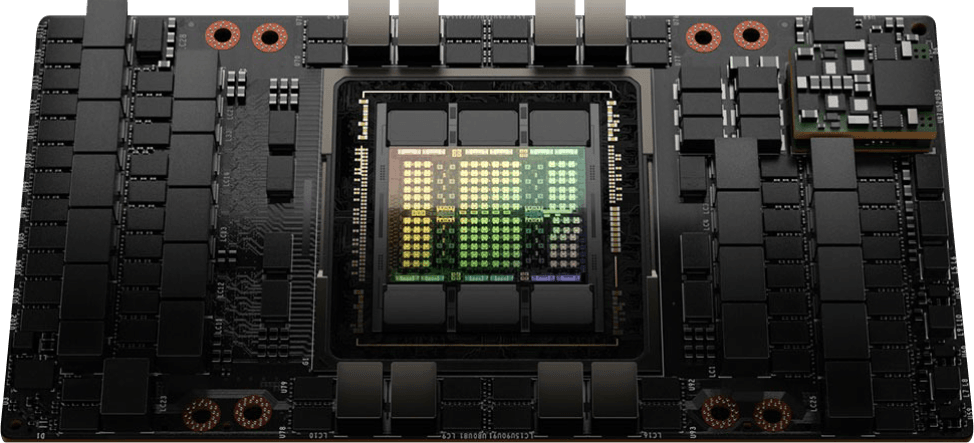

Specifications

Browse specifications for the NVIDIA H200 GPU

| Starting from | Contact us for pricing |

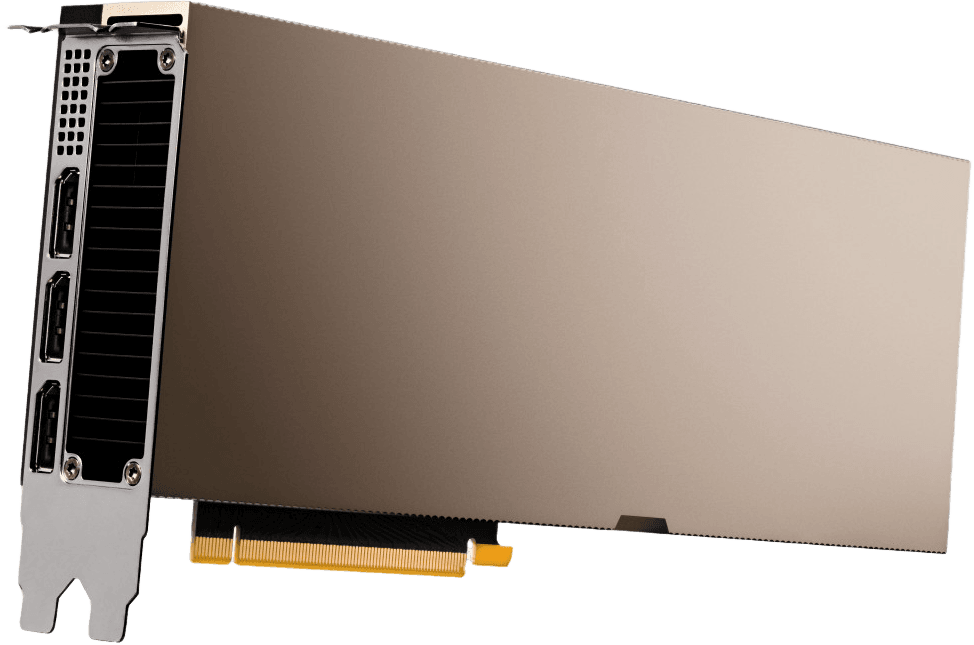

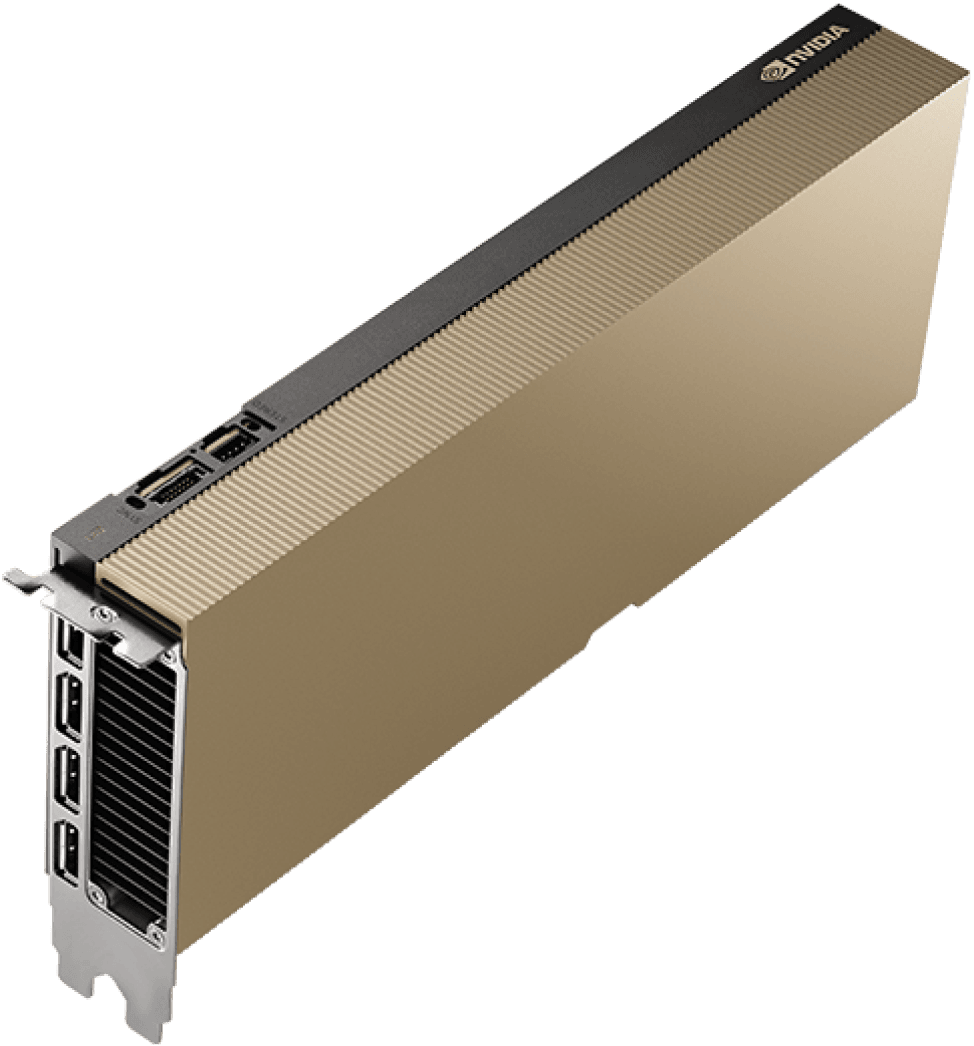

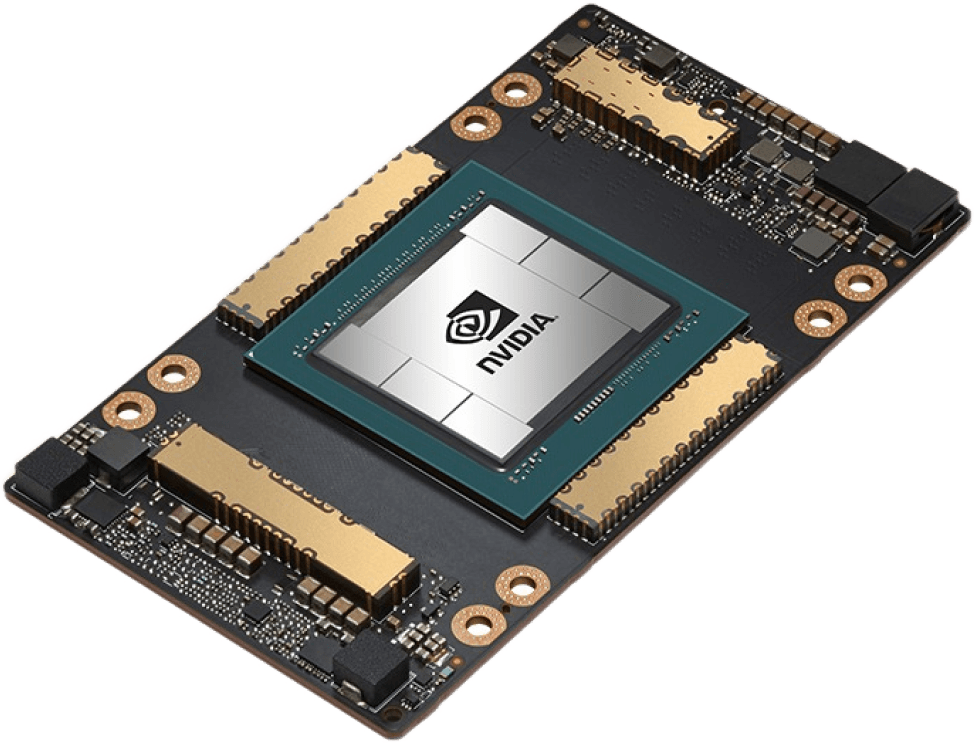

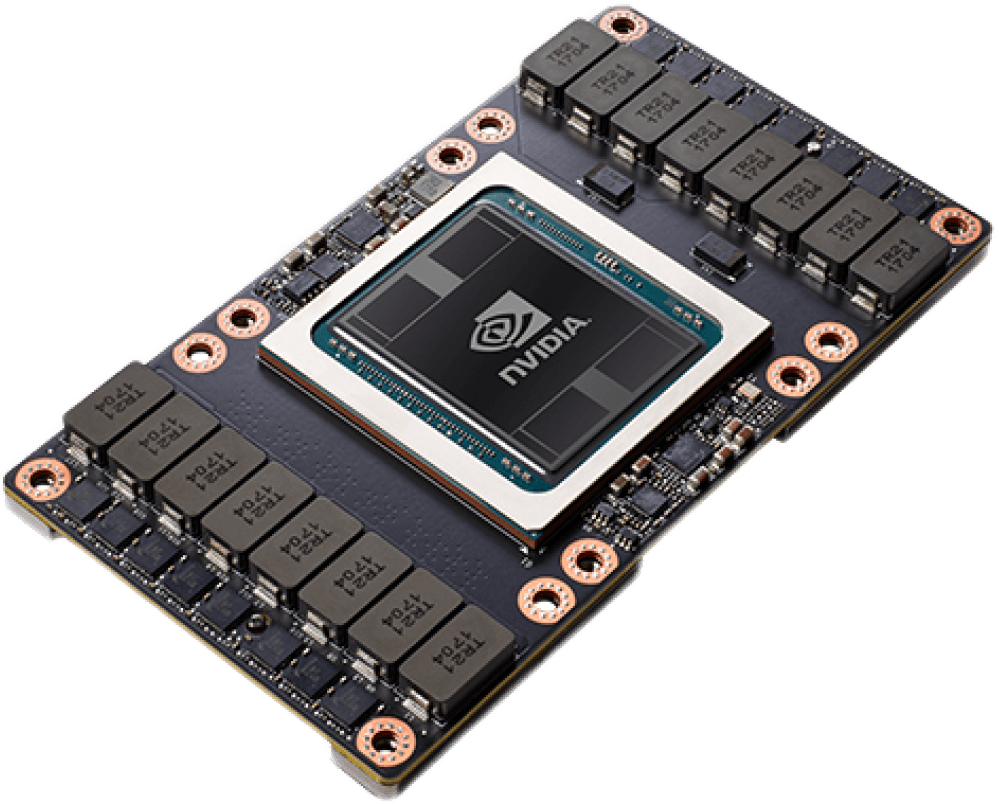

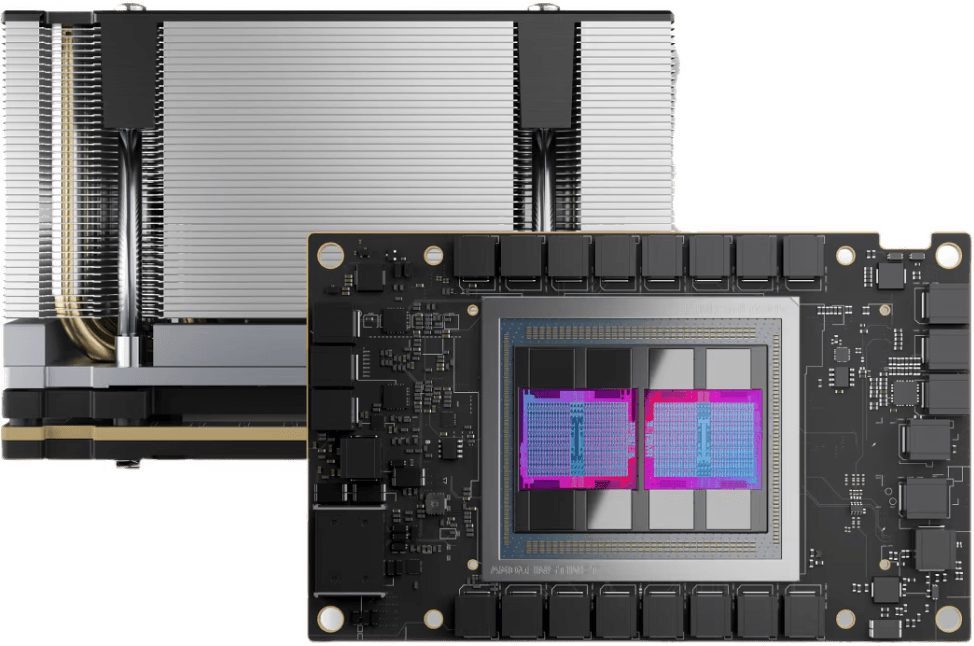

| Specification | NVIDIA H200 |
|---|---|
| Architecture | NVIDIA Hopper |
| Form factor | SXM |
| FP64 | 34 TFLOPS |
| FP64 tensor core | 67 TFLOPS |
| FP32 | 67 TFLOPS |
| TF32 tensor core | 989 TFLOPS* |
| BFLOAT16 tensor core | 1,979 TFLOPS* |
| FP16 tensor core | 1,979 TFLOPS* |
| FP8 tensor core | 3,958 TFLOPS* |
| INT8 tensor core | 3,958 TOPS* |
| GPU memory | 141GB HBM3e |
| GPU memory bandwidth | 4.8 TB/s |
| Decoders | 7x NVDEC, 7x NVJPEG |
| Max thermal design power (TDP) | Up to 700W |
| Multi-instance GPUs (MIG) | Up to 7 MIGs @ 16.5GB each |
| Interconnect | NVLink: 900GB/s; PCIe Gen5: 128GB/s |

\*With sparsity.
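The MIG figures translate into simple capacity arithmetic. This sketch assumes every replica runs in its own smallest (16.5GB) MIG instance; the function name is hypothetical, and larger MIG profiles, which combine several slices, are not modelled here.

```python
# MIG figures from the H200 specifications: up to 7 instances at 16.5GB each.
MIG_INSTANCES_PER_GPU = 7
MIG_INSTANCE_MEMORY_GB = 16.5


def mig_replicas_per_gpu(model_memory_gb: float) -> int:
    """Isolated replicas one H200 could host, one per 16.5GB MIG instance.

    Assumes each replica uses the smallest instance size; a model needing more
    memory than that returns 0 and would need a larger MIG profile or a full GPU.
    """
    if model_memory_gb <= 0:
        raise ValueError("model_memory_gb must be positive")
    if model_memory_gb <= MIG_INSTANCE_MEMORY_GB:
        return MIG_INSTANCES_PER_GPU
    return 0


# Memory exposed across all seven instances (less than the full 141GB).
total_mig_memory_gb = MIG_INSTANCES_PER_GPU * MIG_INSTANCE_MEMORY_GB

if __name__ == "__main__":
    # Hypothetical 7B-parameter model in FP16: roughly 14GB of weights.
    print(mig_replicas_per_gpu(14), total_mig_memory_gb)
```

Under these assumptions, seven replicas of a model needing about 14GB could share one GPU, and the seven instances together expose 115.5GB.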

Ideal use cases for the NVIDIA H200 GPU

Explore use cases for the NVIDIA H200, including AI inference, deep learning and high-performance computing.

AI inference

AI developers can use the NVIDIA H200 to accelerate inference workloads such as image and speech recognition. Its Tensor Cores support low-precision formats such as FP8 and INT8, and its 141GB of memory and 4.8 TB/s of bandwidth let it process large amounts of data quickly, making it well suited to real-time inference applications.
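For token-by-token generation with large language models, memory bandwidth often sets the speed limit. A common back-of-envelope bound, sketched below under stated assumptions, divides the H200's 4.8 TB/s by the bytes of weights read per generated token. It assumes batch size 1, that all weights are streamed once per token, and it ignores KV-cache reads, compute time and kernel overhead, so real throughput will be lower. The function name is hypothetical.

```python
# Memory bandwidth from the H200 specifications: 4.8 TB/s.
H200_BANDWIDTH_BYTES_PER_S = 4.8e12


def max_decode_tokens_per_s(num_params: float, bytes_per_param: float) -> float:
    """Idealized upper bound on single-stream decode speed (tokens/s).

    Assumes every generated token streams all model weights from memory once
    and that nothing else (KV cache, compute, launch overhead) costs time.
    """
    bytes_per_token = num_params * bytes_per_param
    return H200_BANDWIDTH_BYTES_PER_S / bytes_per_token


if __name__ == "__main__":
    # Hypothetical models: 8B parameters in FP16, 70B parameters in FP8.
    print(round(max_decode_tokens_per_s(8e9, 2)))
    print(round(max_decode_tokens_per_s(70e9, 1), 1))
```

The bound explains why lower-precision formats help inference: halving bytes per parameter roughly doubles the ceiling, since fewer bytes cross the memory bus per token.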

Deep learning

The NVIDIA H200 empowers data scientists and researchers to achieve groundbreaking milestones in deep learning. Its large memory and processing power can significantly reduce training and deployment times for complex, large-scale models and enable training on much larger datasets.
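To see how far 141GB goes for training, here is a sketch using a widely cited rule of thumb for mixed-precision training with the Adam optimizer: about 16 bytes per parameter (2 for FP16/BF16 weights, 2 for gradients, 4 for FP32 master weights and 8 for Adam's two FP32 moments). It ignores activation memory and, for multiple GPUs, assumes weights, gradients and optimizer state are all sharded evenly (as in ZeRO stage 3 or FSDP), so treat the result as an upper bound. The function name is hypothetical.

```python
# GPU memory from the H200 specifications: 141GB.
H200_MEMORY_BYTES = 141e9

# Rule-of-thumb bytes per parameter for mixed-precision Adam training:
# FP16/BF16 weights + gradients + FP32 master weights + two FP32 Adam moments.
BYTES_PER_PARAM_ADAM_MIXED = 2 + 2 + 4 + 8  # = 16


def max_trainable_params(num_gpus: int = 1) -> float:
    """Upper bound on parameters whose training state fits in GPU memory.

    Ignores activations and assumes all training state is sharded evenly
    across `num_gpus` GPUs.
    """
    return num_gpus * H200_MEMORY_BYTES / BYTES_PER_PARAM_ADAM_MIXED


if __name__ == "__main__":
    print(max_trainable_params() / 1e9)   # billions of parameters on one GPU
    print(max_trainable_params(8) / 1e9)  # billions across an 8-GPU host
```

By this estimate a single H200 tops out at roughly 8.8 billion parameters before activations are counted, and an 8-GPU host at roughly 70 billion.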

High-performance computing

From complex scientific simulations to weather forecasting and intricate financial modelling, the H200 helps diverse organizations accelerate high-performance computing tasks. Its 4.8 TB/s of memory bandwidth and strong processing capabilities keep memory-intensive workloads running smoothly at scale, so you can get results faster.
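Whether an HPC kernel benefits more from bandwidth or from compute can be estimated with the roofline model, a standard performance model that this page does not itself describe. The sketch below plugs in the H200's 67 TFLOPS FP32 and 4.8 TB/s figures; the kernel example is an assumption chosen for illustration, and its byte count ignores write-allocate traffic.

```python
# Figures from the H200 specifications.
PEAK_FP32_FLOPS = 67e12          # FP32 (non-tensor-core) throughput
BANDWIDTH_BYTES_PER_S = 4.8e12   # GPU memory bandwidth


def ridge_point() -> float:
    """Arithmetic intensity (FLOP/byte) where a kernel stops being memory-bound."""
    return PEAK_FP32_FLOPS / BANDWIDTH_BYTES_PER_S


def attainable_flops(arithmetic_intensity: float) -> float:
    """Roofline bound: the lower of peak compute and bandwidth x intensity."""
    return min(PEAK_FP32_FLOPS, arithmetic_intensity * BANDWIDTH_BYTES_PER_S)


# Example kernel (assumption): STREAM-style triad a[i] = b[i] + s * c[i] in FP32.
# 2 FLOPs per element; 12 bytes moved (read b and c, write a).
triad_intensity = 2 / 12

if __name__ == "__main__":
    print(round(ridge_point(), 2))                  # FLOP/byte
    print(attainable_flops(triad_intensity) / 1e12)  # TFLOPS
```

The triad's intensity (about 0.17 FLOP/byte) sits far below the ridge point of roughly 14 FLOP/byte, so it is bandwidth-bound at about 0.8 TFLOPS; kernels like this are where the H200's memory bandwidth matters most.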

Browse alternative GPU solutions for your workloads

Access a wide range of performant NVIDIA and AMD GPUs to accelerate your AI, ML & HPC workloads

Trusted by NVIDIA. Built for you.

As a preferred partner, we offer NVIDIA’s most advanced GPUs with tested infrastructure, ready for AI, HPC and demanding workloads.



Talk to sales

Reserve GPUs. Access H200 GPUs in the cloud, alongside other high-performance models, for as long as you need them.

Deployment & scaling. Seamless deployment with expert installation, ready to scale as your demands grow.

"CUDO Compute is a true pioneer in aggregating the world's cloud in a sustainable way, enabling service providers like us to integrate with ease"

VPS AI


Get started today or speak with an expert...

Available Mon-Fri 9am-5pm UK time