NVIDIAGB200 NVL72

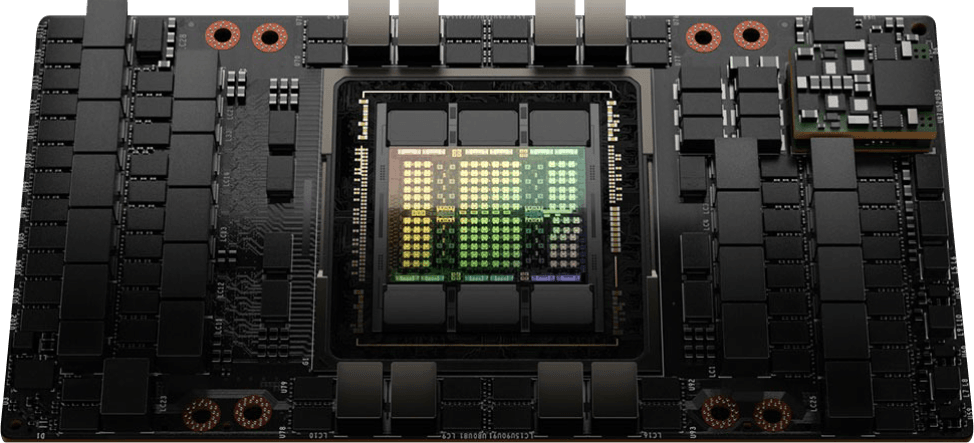

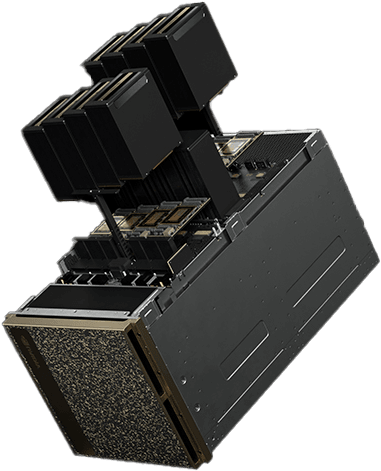

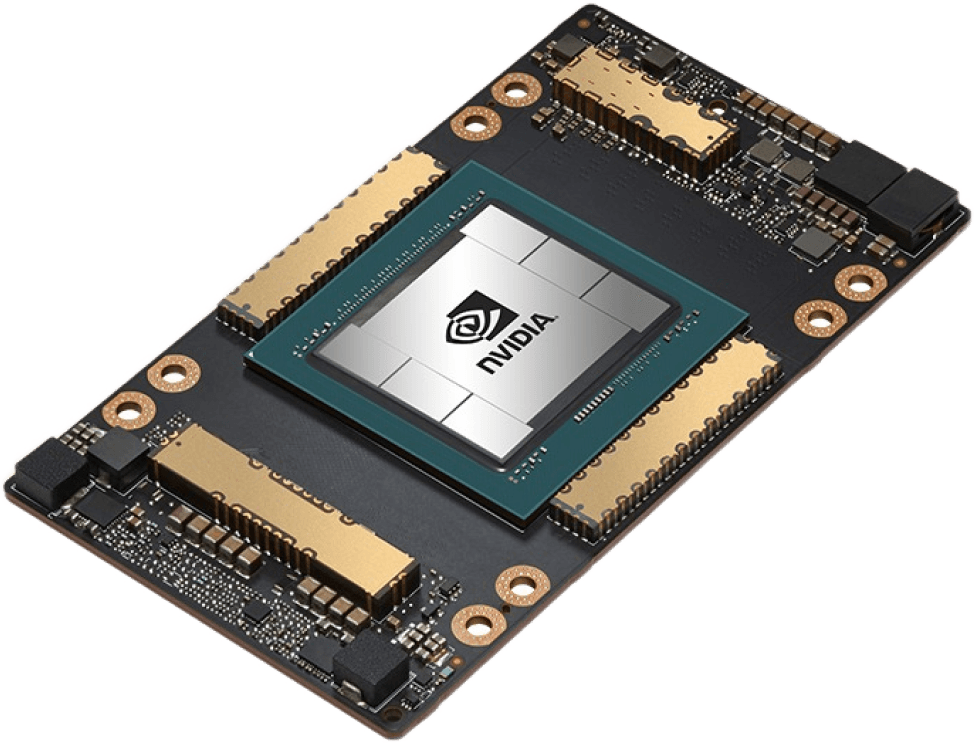

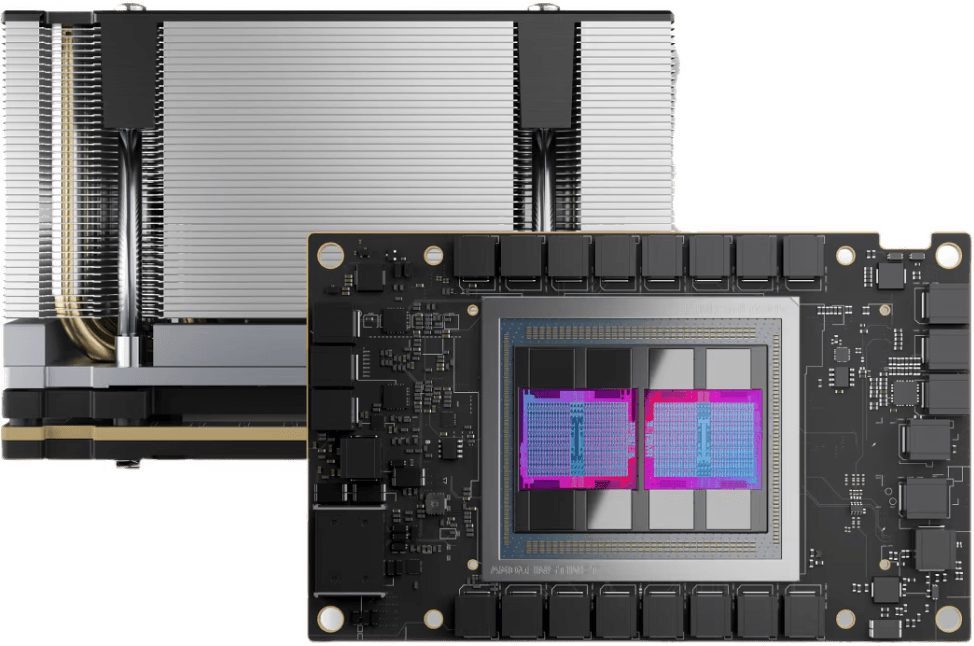

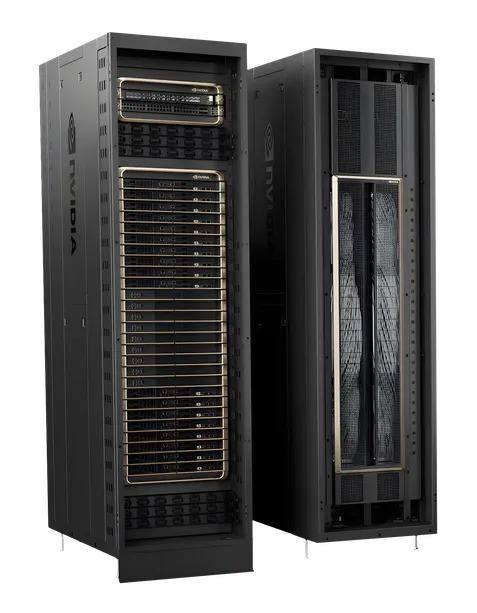

GB200 NVL72 connects 36 Grace CPUs and 72 Blackwell GPUs in a rack-scale design. The GB200 NVL72 is a liquid-cooled, rack-scale solution that boasts a 72-GPU NVLink domain that acts as a single massive GPU and delivers 30X faster real-time trillion-parameter LLM inference.

The NVIDIA GB200 NVL72 is perfect for a wide range of workloads

Deploying AI based workloads on CUDO Compute is easy and cost-effective. Follow our AI related tutorials.

Available at the most cost-effective pricing

Launch your AI products faster with on-demand GPUs and a global network of data center partners

Bare metal

Complete control over a physical machine for more control.

- Powered by renewable energy

- No noisy neighbors

- SpectrumX local networking

- 300Gbps external connectivity

- NVMe SSD storage

Pricing available on request

Enterprise

We offer a range of solutions for enterprise customers.

- Powerful GPU clusters

- Scalable data center colocation

- Large quantities of GPUs and hardware

- Optimize to your requirements

- Expert installation

- Scale as your demand grows

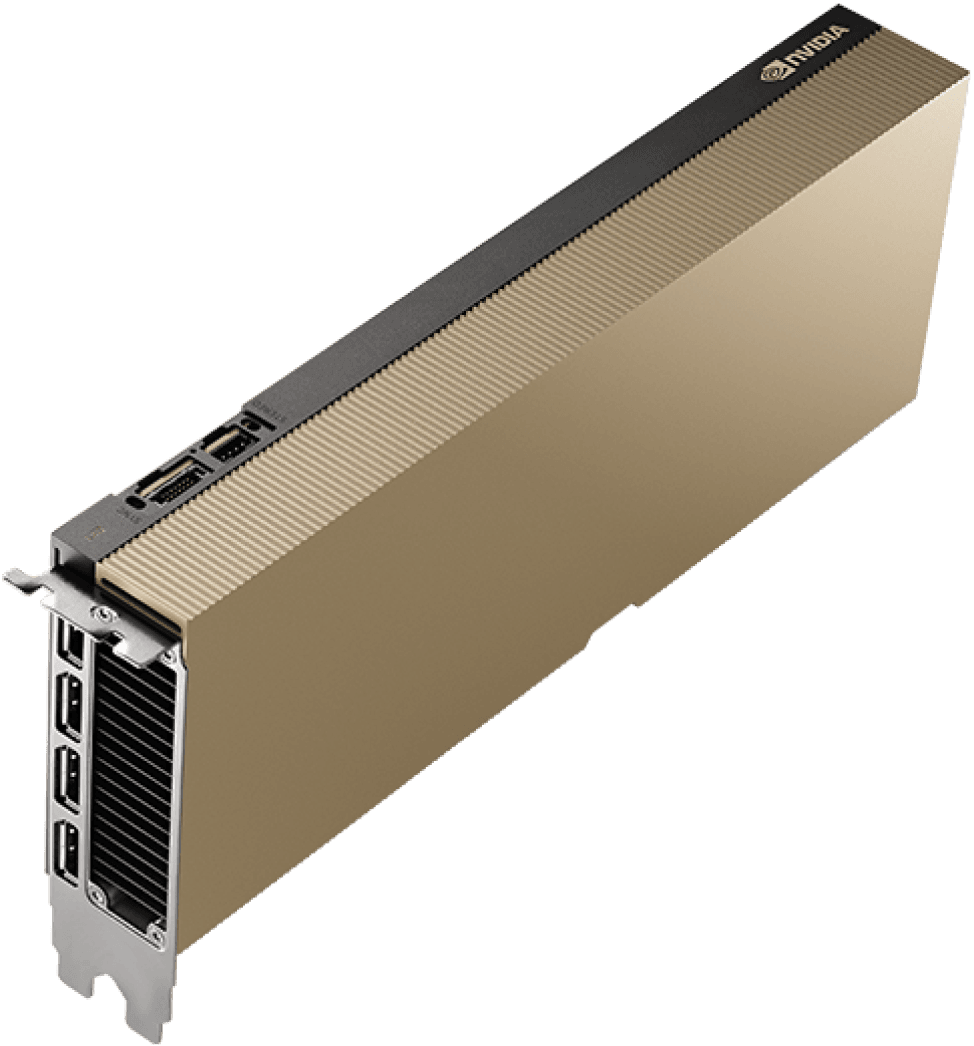

Specifications

Browse specifications for the NVIDIA GB200 NVL72 GPU

| Starting from | Contact us for pricing |

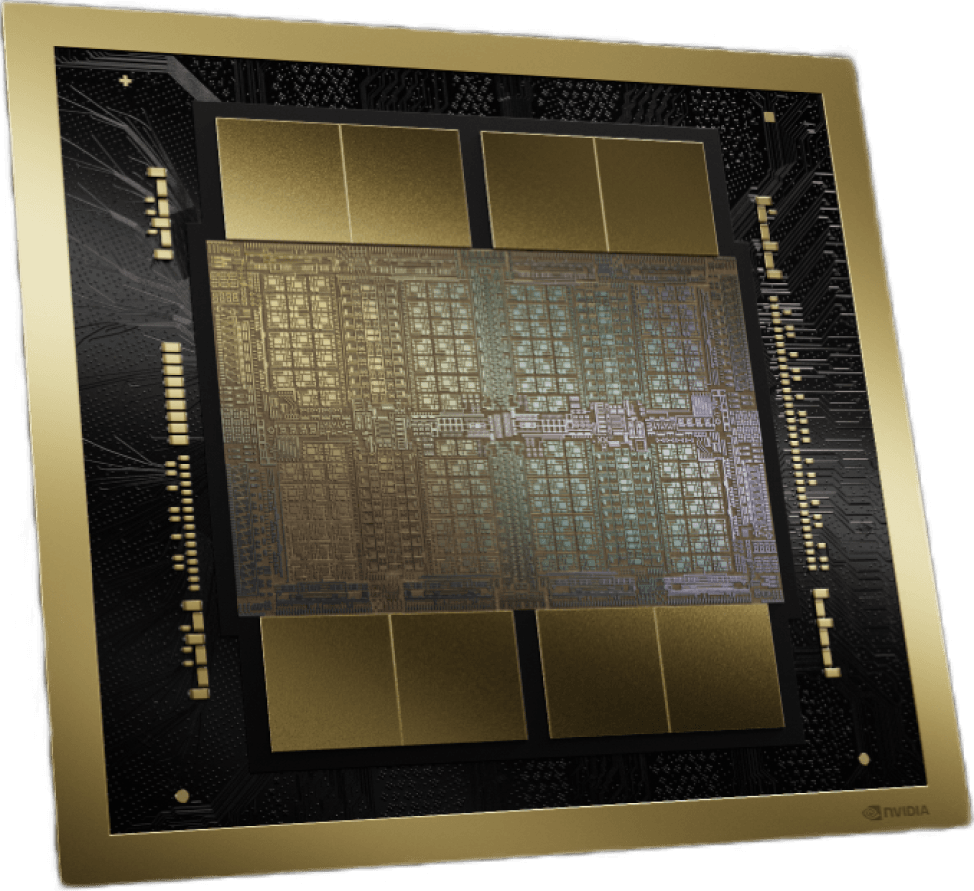

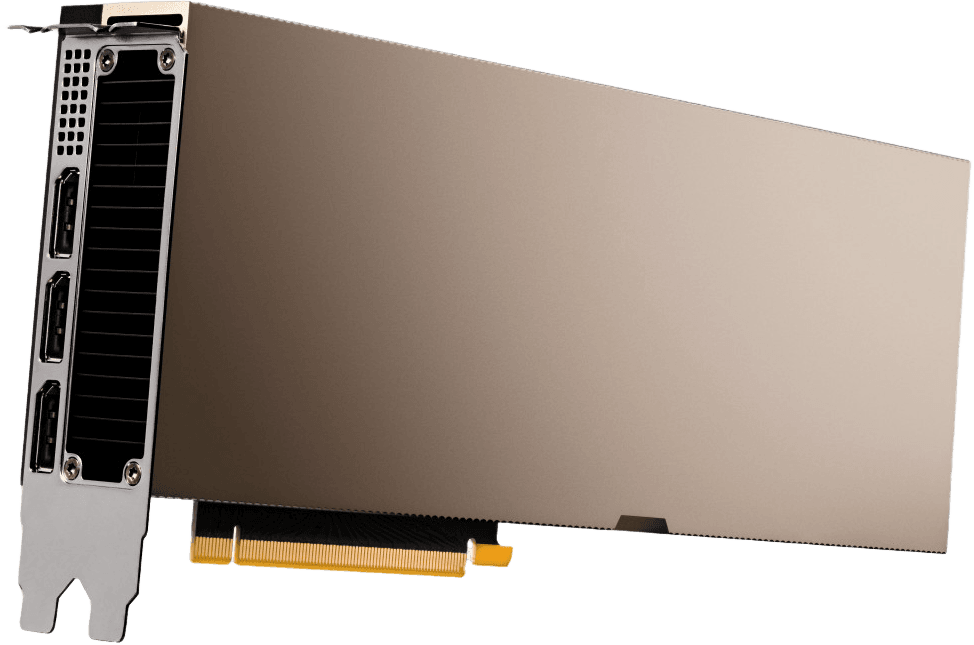

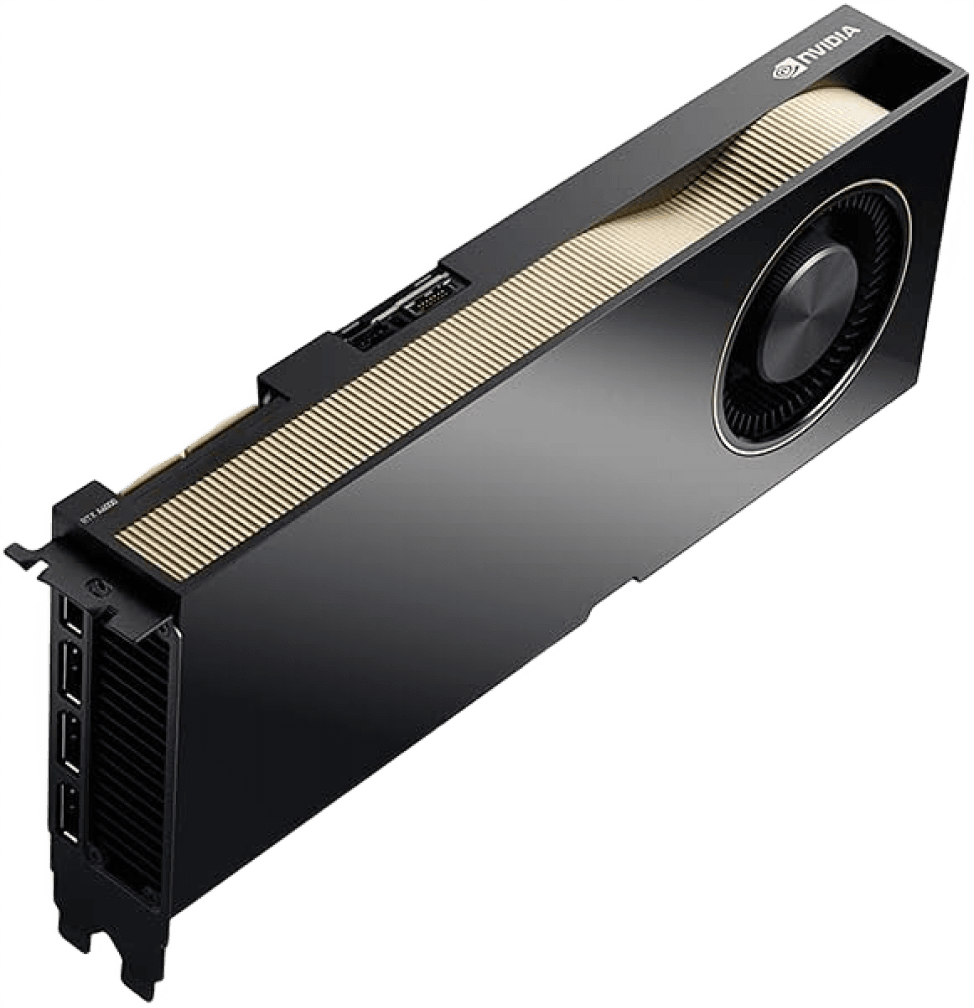

| Architecture | NVIDIA Blackwell |

| Configuration | 36 Grace CPU : 72 Blackwell GPUs |

| FP4 Tensor Core | 1,440 PFLOPS |

| FP8/FP6 Tensor Core | 720 PFLOPS |

| INT8 Tensor Core | 720 POPS |

| FP16/BF16 Tensor Core | 360 PFLOPS |

| TF32 Tensor Core | 180 PFLOPS |

| FP32 | 6,480 TFLOPS |

| FP64 | 3,240 TFLOPS |

| FP64 Tensor Core | 3,240 TFLOPS |

| GPU Memory | Bandwidth | Up to 13.5 TB HBM3e | 576 TB/s |

| NVLink Bandwidth | 130TB/s |

| CPU Core Count | 2,592 Arm® Neoverse V2 cores |

| CPU Memory | Bandwidth | Up to 17 TB LPDDR5X | Up to 18.4 TB/s |

Ideal uses cases for the NVIDIA GB200 NVL72 GPU

Explore uses cases for the NVIDIA GB200 NVL72 including Supercharging next-generation AI and accelerated computing, Energy-efficient infrastructure, Massive-scale training.

Supercharging next-generation AI and accelerated computing

GB200 NVL72 introduces cutting-edge capabilities and a second-generation Transformer Engine which enables FP4 AI and when coupled with fifth-generation NVIDIA NVLink, delivers 30X faster real-time LLM inference performance for trillion-parameter language models.

Energy-efficient infrastructure

Liquid-cooled GB200 NVL72 racks reduce a data center’s carbon footprint and energy consumption. Liquid cooling increases compute density, reduces the amount of floor space used, and facilitates high-bandwidth, low-latency GPU communication with large NVLink domain architectures.

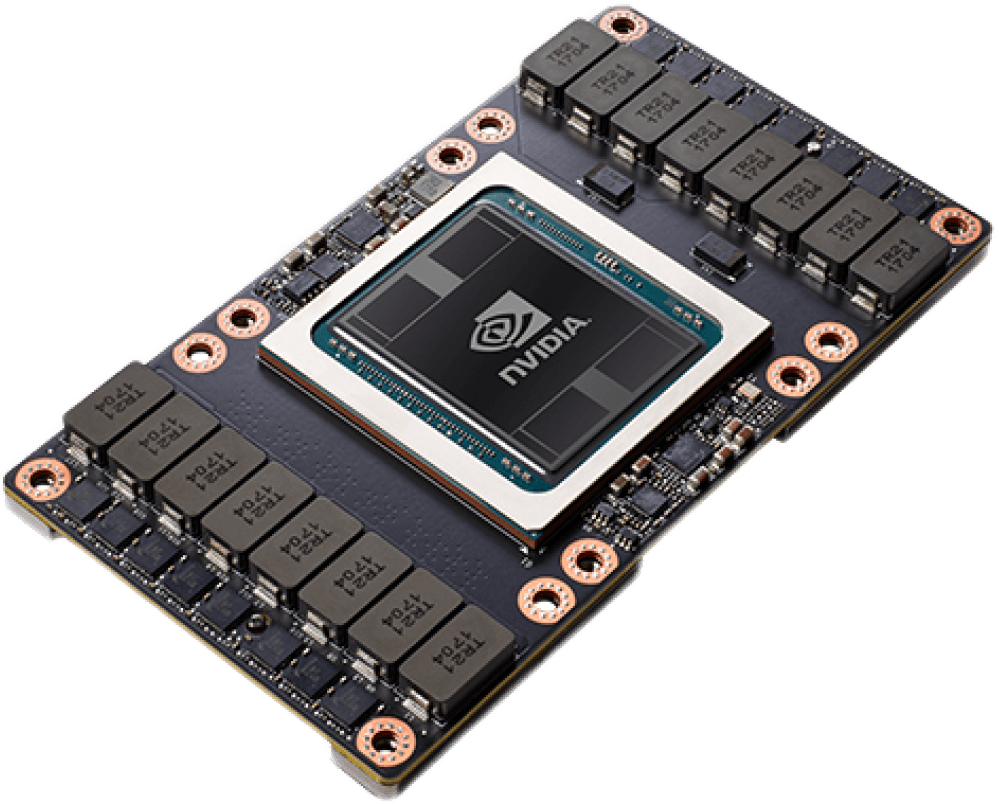

Massive-scale training

GB200 NVL72 includes a faster second-generation Transformer Engine featuring FP8 precision, enabling a remarkable 4X faster training for large language models at scale.

Browse alternative GPU solutions for your workloads

Access a wide range of performant NVIDIA and AMD GPUs to accelerate your AI, ML & HPC workloads

Trusted by NVIDIA. Built for you.

As a preferred partner, we offer NVIDIA’s most advanced GPUs with tested infrastructure, ready for AI, HPC and demanding workloads.

Also trusted by our other key partners:

Talk to sales

Reserve GPUs. Access a GB200 NVL72 GPU Cloud alongside other high performance models for as long as you need it.

Deployment & scaling. Seamless deployment alongside expert installation, ready to scale as your demands grow.

"CUDO Compute is a true pioneer in aggregating the world's cloud in a sustainable way, enabling service providers like us to integrate with ease"

VPS AI

Loading GPU resource form...

Get started today or speak with an expert...

Available Mon-Fri 9am-5pm UK time