The surge of generative AI and other demanding computational applications has fundamentally reshaped enterprise computing, and businesses are increasingly turning to GPUs to overcome the associated challenges of building and scaling AI applications.

Two choices for building AI solutions are GPU clusters and bare metal servers. Though both deliver high performance, their ideal use cases vary greatly. Making informed decisions requires understanding their core strengths, limitations, cost, scalability, and flexibility.

Considering that 94% of enterprises experience avoidable cloud expenses due to issues like resource underutilization and overprovisioning, choosing the right infrastructure is not only about performance but also about financial prudence. In this article, we will discuss GPU clusters and bare metal servers, providing actionable insights to help you select the infrastructure that aligns with your specific workloads and budget.

Difference between GPU clusters and bare metal servers

GPU clusters and bare metal servers serve different purposes, especially when used for AI and HPC tasks, and understanding these distinctions helps in selecting the right infrastructure for specific computational requirements. To understand their differences, let’s begin by discussing what GPU clusters are.

What is a GPU cluster?

A GPU cluster is a distributed computing system designed for massive parallel workloads, primarily using the GPUs’ parallel processing capabilities. We've discussed this in previous articles, but here is a brief rundown of what that means and how it works.

GPUs can process multiple tasks simultaneously because they have thousands of cores. Workloads are broken down into smaller tasks that are distributed across these cores for parallel execution, typically using GPU programming frameworks such as CUDA (for NVIDIA GPUs), OpenCL, or HIP (for AMD GPUs).

Nodes are interconnected through an InfiniBand HDR network. Source: Paper

A GPU cluster comprises multiple compute nodes, each equipped with one or more GPUs. These nodes communicate through a high-bandwidth, low-latency interconnect, commonly InfiniBand or high-speed Ethernet. These interconnects often use protocols such as remote direct memory access (RDMA), which allows nodes to access each other's memory directly, bypassing the CPU and significantly reducing overhead.

The performance and efficiency of inter-node communication are heavily dependent on the chosen network fabric. Given the huge volume of data processed by GPUs, the interconnect needs to provide extremely high bandwidth and minimal latency to ensure fast data transfer between nodes.

For managing large-scale parallelism across multiple GPUs or multiple nodes in clusters, distributed computing frameworks such as the message passing interface (MPI) are commonly used. MPI facilitates effective workload distribution and communication across the cluster, ensuring efficient parallel execution.
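One communication pattern that comes up often in distributed training is an all-reduce, which MPI exposes as `MPI_Allreduce`: every worker contributes a local result and every worker receives the combined result. The following is a minimal sketch in plain Python that only simulates the ranks with lists. It does not call MPI, and the function name `allreduce_mean` and the sample gradient values are hypothetical.

```python
from typing import List


def allreduce_mean(local_grads: List[List[float]]) -> List[List[float]]:
    """Simulate an all-reduce that averages gradients across ranks.

    local_grads[r] is the gradient vector held by simulated rank r.
    Every rank ends up with the same element-wise mean.
    """
    num_ranks = len(local_grads)
    # Element-wise sum across ranks (the "reduce" step).
    summed = [sum(values) for values in zip(*local_grads)]
    mean = [s / num_ranks for s in summed]
    # Every rank receives its own copy of the result (the "all" step).
    return [list(mean) for _ in range(num_ranks)]


# Hypothetical gradients computed by four ranks on different data shards.
local_grads = [
    [1.0, 2.0],
    [3.0, 4.0],
    [5.0, 6.0],
    [7.0, 8.0],
]
synced = allreduce_mean(local_grads)
print(synced[0])
```

In a real cluster this exchange travels over the interconnect, which is why the network fabric has such a direct effect on training throughput.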

What is a bare metal server?

A bare metal server is a dedicated physical server provided exclusively to a single user or tenant, without any virtualization layers or hypervisors between the hardware and the user's applications. Unlike virtual servers or cloud instances, which share physical resources among multiple tenants, a bare metal server gives users complete control and direct access to the underlying hardware.

Virtualization layers or hypervisors are software solutions that create multiple virtual machines (VMs) or instances on a single physical server. These layers enable the hardware resources to be shared among multiple users or workloads simultaneously.

Hypervisors, also called virtual machine managers, are specialized software tools like VMware ESXi, Microsoft Hyper-V, or KVM that divide a single physical server into multiple isolated environments known as virtual machines (VMs). Each VM can independently run its own operating system and applications as though it were a separate physical server.

For example, consider a physical server equipped with 32 CPU cores, 256 GB RAM, and 2 TB of storage. Instead of dedicating this entire hardware configuration to a single workload, a hypervisor like VMware ESXi could partition the resources into several smaller virtual machines:

- VM 1: 8 CPU cores, 64 GB RAM, running Windows Server to host business applications.

- VM 2: 16 CPU cores, 128 GB RAM, running Ubuntu Linux to support a database.

- VM 3: 4 CPU cores, 32 GB RAM, running CentOS Linux as a web server.

- VM 4: 4 CPU cores, 32 GB RAM, running Windows 11 as a virtual desktop environment.
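The partitioning above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes the hypervisor reserves nothing for itself and does not overcommit resources, which real deployments may not satisfy. The class `VM`, the function `fits_on_host`, and the VM names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class VM:
    name: str
    cpu_cores: int
    ram_gb: int


# Host capacity taken from the example in the text.
HOST_CPU_CORES = 32
HOST_RAM_GB = 256

# The four virtual machines from the example, with illustrative names.
vms = [
    VM("windows-server-apps", 8, 64),
    VM("ubuntu-database", 16, 128),
    VM("centos-web", 4, 32),
    VM("windows-11-desktop", 4, 32),
]


def fits_on_host(vms: List[VM], host_cores: int, host_ram_gb: int) -> Tuple[bool, int, int]:
    """Return whether the VMs fit, plus the total cores and RAM they request."""
    used_cores = sum(vm.cpu_cores for vm in vms)
    used_ram = sum(vm.ram_gb for vm in vms)
    fits = used_cores <= host_cores and used_ram <= host_ram_gb
    return fits, used_cores, used_ram


fits, used_cores, used_ram = fits_on_host(vms, HOST_CPU_CORES, HOST_RAM_GB)
print(fits, used_cores, used_ram)
```

Under these assumptions the four VMs consume exactly the 32 cores and 256 GB of the host, leaving nothing spare.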

The general architecture of containers, virtual machines, and their combination. Source: Paper

Each VM runs independently, unaware that it shares hardware with other VMs. The hypervisor allocates resources dynamically and securely isolates each environment, preventing one VM's activities or issues from affecting others.

Since bare metal servers do not use hypervisors, they remove the complexity associated with virtualization, resulting in higher performance, lower latency, and more predictable resource availability, making them ideal for resource-intensive applications.

Bare metal servers also provide greater flexibility in hardware configuration, allowing users to select specific CPU types, RAM capacity, GPU acceleration, and storage configurations tailored precisely to their workloads. Additionally, their dedicated nature enhances security, compliance, and isolation since the entire server is under the exclusive control of a single organization or user.

GPU clusters vs. bare metal servers properties comparison

There are a lot of factors that determine which of these solutions suits your organization best. To help you get the best out of your cloud spending, let’s discuss the properties of GPU clusters and bare metal servers.

Computational performance

- GPU clusters: GPU clusters are great for handling tasks requiring a lot of simultaneous calculations, like training AI models. Think of it as having many powerful workers tackling different parts of a large project at the same time.

For instance, when training a deep neural network, a core operation we have to carry out is multiplying huge tables of numbers (matrices). A GPU cluster splits this massive calculation into smaller pieces, assigning each piece to a different GPU, which significantly speeds up the training, delivering results much faster than a single computer could.

- Bare metal servers: Bare metal servers offer dedicated hardware resources, providing consistent, predictable performance and low latency. Each one is a complete physical machine that can be built to a specific configuration.

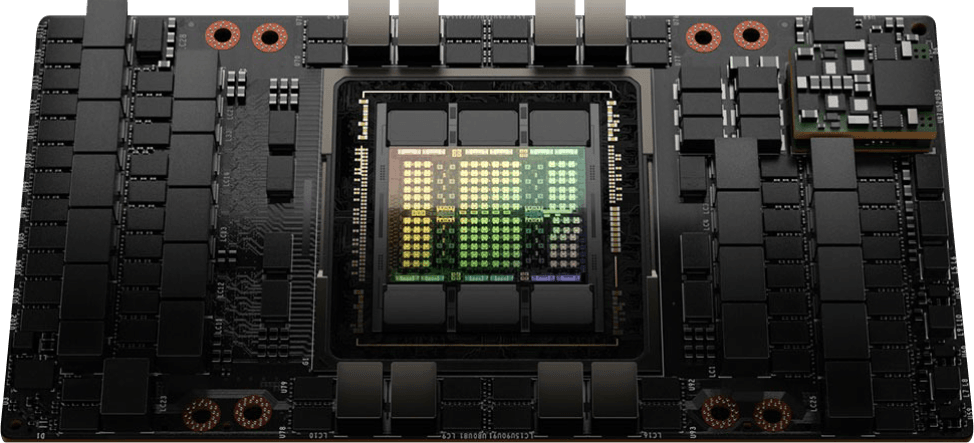

They can be used for sequential tasks and applications demanding direct hardware access, such as high-frequency trading, where minute latency variations are critical. They can also be used for training AI models if they are configured for it. For example, CUDO Compute offers GPU-based bare metal servers outfitted with NVIDIA GPUs, such as a configuration with 8 NVIDIA HGX SXM5 H100 GPUs, specifically for AI and HPC tasks.
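The matrix-splitting idea described for GPU clusters above can be sketched in plain Python. This is only an illustration of the decomposition: the "workers" are ordinary function calls executed one after another rather than real GPUs, and the names `split_rows` and `distributed_matmul` are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix multiply of an (m x k) matrix by a (k x n) matrix."""
    cols_b = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b] for row in a]


def split_rows(a: Matrix, num_workers: int) -> List[Matrix]:
    """Divide the rows of a into contiguous blocks, one block per worker."""
    base, extra = divmod(len(a), num_workers)
    blocks, start = [], 0
    for worker in range(num_workers):
        size = base + (1 if worker < extra else 0)
        blocks.append(a[start:start + size])
        start += size
    return blocks


def distributed_matmul(a: Matrix, b: Matrix, num_workers: int) -> Matrix:
    """Multiply a by b by giving each worker one row block of a."""
    blocks = split_rows(a, num_workers)
    # On a cluster, each of these multiplications would run on a different GPU.
    partial_results = [matmul(block, b) for block in blocks]
    # Stack the per-worker results back into one matrix.
    return [row for part in partial_results for row in part]


a = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0], [1.0, 0.0, 1.0]]
b = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(distributed_matmul(a, b, num_workers=3))
```

Each row block is independent of the others, which is what allows the pieces to run at the same time. The only coordination needed here is collecting the results at the end.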

Latency and responsiveness

- GPU clusters: While GPU clusters provide immense computational power, they introduce network latency due to inter-node communication. Data must be transferred between nodes over the network, which can introduce delays.

However, modern interconnect technologies like InfiniBand and NVLink help reduce this latency. NVLink, for example, provides high-bandwidth, low-latency communication between GPUs within a single node and, in systems built with NVLink switches, across multiple nodes.

The goal is to minimize the impact of network latency on overall performance, ensuring that the benefits of parallel processing outweigh the overhead of network communication.

- Bare metal servers: Bare metal servers offer significantly lower latency because applications communicate directly with local memory, storage, and other hardware components, which eliminates the overhead of network communication.
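The trade-off between local access and inter-node transfers can be approximated with a simple cost model: transfer time is a fixed latency plus the message size divided by the bandwidth. The sketch below assumes this first-order model, and the link parameters are illustrative placeholders rather than measurements of any real hardware.

```python
def transfer_time_s(message_bytes: float, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """First-order transfer cost: fixed latency plus size divided by bandwidth."""
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return latency_s + message_bytes / bandwidth_bytes_per_s


# Hypothetical link parameters, chosen only to illustrate the model.
LOCAL_LINK = {"latency_s": 1e-6, "bandwidth_bytes_per_s": 100e9}
NETWORK_LINK = {"latency_s": 5e-6, "bandwidth_bytes_per_s": 25e9}

for size in (1024, 1_000_000, 1_000_000_000):
    local = transfer_time_s(size, **LOCAL_LINK)
    network = transfer_time_s(size, **NETWORK_LINK)
    print(f"{size:>13} bytes  local={local:.6e}s  network={network:.6e}s")
```

For small messages the fixed latency term dominates, while for large messages the bandwidth term does. This is one reason distributed workloads tend to batch communication into fewer, larger transfers.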

Resource control and isolation

- GPU clusters: GPU clusters provide distributed resource allocation managed by orchestration software like Kubernetes, Slurm, or Apache Mesos. These tools manage the allocation of GPUs, network resources, and storage to keep the cluster efficiently utilized.

- Bare metal servers: Bare metal servers offer single-tenant hardware access, providing complete resource isolation and control. Users have exclusive access to the physical server, ensuring that their workloads are isolated from other users. This is crucial for applications that require high security and compliance, as it ensures that sensitive data is protected.

Scalability

Scaling, in computing, refers to the ability of a system to handle increased workloads. When demand rises, we need to add resources to maintain performance. There are two primary ways to do this:

- Horizontal scaling (Scaling out) involves adding more machines or nodes to the system. It is like expanding your workforce by hiring more people.

- Vertical scaling (Scaling up) involves increasing the capabilities of an existing machine. Think of it as giving your current workers more powerful tools or enhancing their skills.

Let's break down the concepts of horizontal and vertical scaling in the context of GPU clusters and bare metal servers:

- GPU clusters: GPU clusters offer exceptional horizontal scalability. When you need more processing power, you add more nodes (each with multiple GPUs) to the cluster. For many parallel workloads, the performance increase is almost linear: doubling the nodes nearly doubles the performance.

Such a level of horizontal scalability makes GPU clusters highly adaptable to rapidly growing demands, and it brings three main advantages:

The first is elasticity: you can easily adjust capacity as needed. The second is reliability and fault tolerance: if one node fails, others can take over. Finally, it is often cost-effective because performance gains are close to linear.

The best part is that when using GPU clusters on CUDO Compute, we allow for dynamic scaling, so you can get more nodes when you need more compute resources and scale down when you need less. Contact us to learn more.

- Bare metal servers: Scaling with bare metal usually involves vertical resource upgrades, such as adding RAM, GPUs, or CPU capacity, or provisioning additional dedicated servers from your cloud provider. While effective, this approach is less elastic and requires careful upfront planning.

| Feature | Horizontal Scaling (GPU Clusters) | Vertical Scaling (Bare Metal Servers) |
| --- | --- | --- |
| Method | Adding more machines | Upgrading existing machines |
| Elasticity | High | Low |
| Fault tolerance | High | Low |
| Cost-effectiveness (large scale) | High | Lower |
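How close horizontal scaling gets to linear depends on how much of the workload can run in parallel. Amdahl's law gives a rough upper bound on speedup. The sketch below applies it while ignoring communication overhead, so real clusters will usually fall somewhat below these figures. The function names and the sample parallel fractions are illustrative.

```python
def amdahl_speedup(parallel_fraction: float, num_nodes: int) -> float:
    """Upper bound on speedup when parallel_fraction of the work scales with nodes."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if num_nodes < 1:
        raise ValueError("num_nodes must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / num_nodes)


def scaling_efficiency(parallel_fraction: float, num_nodes: int) -> float:
    """Speedup divided by node count; 1.0 means perfectly linear scaling."""
    return amdahl_speedup(parallel_fraction, num_nodes) / num_nodes


# Illustrative parallel fractions, not measurements of any real workload.
for fraction in (0.90, 0.99, 1.00):
    for nodes in (2, 8, 32):
        speedup = amdahl_speedup(fraction, nodes)
        efficiency = scaling_efficiency(fraction, nodes)
        print(f"parallel={fraction:.2f} nodes={nodes:>2} "
              f"speedup={speedup:6.2f} efficiency={efficiency:.2f}")
```

Workloads with even a small serial portion see efficiency fall as nodes are added, which is worth checking before assuming that doubling the cluster will double throughput.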

Flexibility

- GPU clusters: Clusters inherently provide flexibility, supporting a wide range of frameworks and libraries, and can easily adapt to changing requirements via orchestration tools and virtualization technologies.

- Bare metal servers: While powerful, bare metal servers require deliberate hardware provisioning. Changing hardware setups frequently can be costly and time-consuming, making them less flexible than GPU clusters.

Now that you know the properties of GPU clusters and bare metal servers, the question would be how you can choose between them and what you ideally should use each for. Let’s discuss that next.

How to choose between GPU clusters and bare metal servers

Choosing between GPU clusters and bare metal servers hinges on clearly understanding your workload. GPU clusters are suited for training complex AI and machine learning models, offering powerful parallel processing capabilities. Bare metal servers, meanwhile, are well suited to inference workloads and latency-sensitive tasks, delivering dedicated hardware resources and predictable performance.

Before diving deeper into technical specs or cost analyses, first evaluate your application’s specific requirements:

- Identify your compute needs

Begin by identifying your compute requirements. Ask whether your workload is highly parallel, which is ideal for GPU clusters performing intensive model training, or sequential, which might be better served by the predictable, low-latency execution that bare metal servers provide during inference. Remember that while some inference tasks can be parallelized, bare metal servers can be equipped with dedicated GPUs to handle those efficiently.

- Assess your memory demands

Consider your memory requirements. Do you need a lot of high-bandwidth memory to manage large datasets, or are your needs relatively modest? If your workload demands substantial high-bandwidth memory, GPU clusters are generally the best option.

- Consider security and compliance

Security and regulatory compliance are also crucial. If your workload involves sensitive data, the dedicated, single-tenant environment provided by bare metal servers might offer superior isolation and adherence to regulatory standards.

- Examine scalability

Scalability is another important factor. GPU clusters provide dynamic scaling to accommodate fluctuations in workload, which is especially beneficial for evolving AI training projects. In contrast, bare metal servers, while less flexible, deliver stable performance tailored to consistent inference workloads.

- Factor in budget considerations

Finally, evaluate your budget comprehensively. Beyond the upfront costs, account for ongoing expenses such as maintenance, power consumption, staffing, and software licensing.

By systematically assessing these aspects, you can establish a clear, actionable framework for selecting the infrastructure solution that best matches your unique workload, optimizing both performance and cost efficiency.
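The checklist above can be turned into a rough first-pass helper. This is a deliberately simple sketch that treats every criterion as equally important and leaves budget out entirely, so it is a starting point for discussion rather than a decision rule. The class `Workload`, the function `suggest_infrastructure`, and the returned labels are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    highly_parallel_training: bool
    needs_large_high_bandwidth_memory: bool
    demand_fluctuates: bool
    latency_sensitive: bool
    needs_single_tenant_isolation: bool


def suggest_infrastructure(w: Workload) -> str:
    """Count the criteria from the checklist that point to each option."""
    cluster_score = sum([
        w.highly_parallel_training,
        w.needs_large_high_bandwidth_memory,
        w.demand_fluctuates,
    ])
    bare_metal_score = sum([
        w.latency_sensitive,
        w.needs_single_tenant_isolation,
    ])
    if cluster_score > bare_metal_score:
        return "gpu_cluster"
    if bare_metal_score > cluster_score:
        return "bare_metal"
    # A tie suggests a hybrid setup or a closer look at the requirements.
    return "hybrid_or_review"


training_job = Workload(
    highly_parallel_training=True,
    needs_large_high_bandwidth_memory=True,
    demand_fluctuates=True,
    latency_sensitive=False,
    needs_single_tenant_isolation=False,
)
print(suggest_infrastructure(training_job))
```

In practice the criteria would carry different weights for different organizations, and cost would be evaluated alongside them.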

Whichever of these you choose, CUDO Compute’s got you covered. You can begin using our GPU clusters for AI training or our bare metal servers for inference tasks today. And if your requirements span both worlds, our dedicated enterprise solutions team can craft a flexible, hybrid approach tailored specifically to your unique needs. Contact us to learn more.
