We're living in an age of algorithms. AI is everywhere, shaping our experiences in ways both big and small. It recommends movies to watch and songs to listen to, and it sometimes even helps decide whether we get a loan or predicts the likelihood of recidivism for individuals in the criminal justice system. It's incredible to think about. But here's the thing: while AI can recommend books and drive cars, we often don't know how it makes its decisions.

Former Google CEO Eric Schmidt recently discussed this: When we build AIs, we simply create algorithms that we feed data to. The AIs take it from there, making decisions and reaching conclusions that we don’t fully understand—a phenomenon known as the black-box problem. This lack of transparency can lead to serious consequences, such as perpetuating biases in loan applications or leading to wrongful convictions based on flawed risk assessments.

Life cycle of a DNN application. Source: Paper

While AI has the potential to do incredible things, if we don't understand how it works, how can we truly trust it? This is why we need explainable AIs (XAIs). XAIs offer a way to shed light on these black boxes, allowing us to understand the reasoning behind AI's decisions and ensure fairness, accountability, and trust. In this article, we’ll discuss XAIs, what they are, how they work, and how to build them. Let’s begin by understanding what the black-box problem is.

What is the black-box problem?

Imagine a magic box. You put ingredients in, and something impressive comes out, but you have no idea what happened inside. That's what it's like with many AI systems today. We provide the input (data) and receive the output (a decision or prediction), but the process in between remains hidden, which is the essence of the black-box problem.

This "black box" refers to the complex internal workings of AI algorithms, especially deep learning models. These models stack many layers of interconnected artificial neurons, often with millions of parameters, and process information in ways that are difficult for humans to follow.

Even the developers who build these AI systems may not fully understand how they arrive at specific conclusions. Imagine having a brilliant friend who gives excellent advice but can't explain their logic.

Why is this a problem? Here are a few reasons:

- Lack of trust: It's hard to trust a system when we don't understand how it makes decisions, especially in high-stakes scenarios like healthcare or finance.

- Bias and fairness: Some training data contain hidden biases that can lead to unfair or discriminatory outcomes. Without transparency, these biases can be difficult to identify and address.

- Debugging and improvement: If an AI system makes an error, it's hard to pinpoint the cause without understanding its internal logic. This makes it challenging to debug and improve the system.

- Accountability: If an AI system causes harm, who is responsible? The lack of explainability makes it difficult to assign accountability.

The black box problem hinders AI's widespread adoption and ethical use, which is why explainable AI needs more attention. Opening the black box gives us insights into AI's decision-making processes, builds trust, and ensures that AI is used responsibly.

What is explainable AI?

Explainable AI (XAI) is a set of techniques and methods that allow us to understand how an AI system makes decisions. Unlike black-box models, XAIs try to give clear insight into how inputs are turned into outputs.

When building XAIs, there are a few goals to aim for. The first is interpretability, the ability to describe how a model operates in simple terms that humans understand. The second is transparency, which means giving clear access to the data and features that influence the AI’s decisions.

Lastly, XAIs should be justifiable: their decisions should be logically explainable and ethically defensible. Many current AI models offer none of these properties.

Think of it like this: if a doctor diagnoses you with an illness, you'd want to understand the reasoning behind their diagnosis. You'd like to know what symptoms they observed, what tests they ran, and how they interpreted the results. XAI provides that same level of transparency for AI systems.

There are different ways to build an XAI, ranging from simple rule-based explanations to complex visualizations of the AI's internal workings. Some techniques focus on explaining individual predictions, while others try to give a more general understanding of the model's behavior.

In the next section, we'll delve deeper into some of the common XAI techniques and how they work.

Common techniques to build explainable AIs

Depending on the model type and application, there are a few ways to achieve explainability in AI. Let’s outline some approaches to building an XAI:

1. Model-intrinsic explainability:

There are AI models whose results are naturally easy to interpret because their architecture is simple. These models allow us to understand the reasoning behind their predictions without additional techniques. Two examples are:

- Linear regression: Linear regression models assign each feature a learned coefficient, so you can directly see which features have a positive or negative effect on the outcome and how strong that effect is.

- Decision trees: Decision trees show you a clear path from inputs to outputs, making it easy to trace the model’s decisions. You can follow the tree's branches to understand how it arrived at a particular decision.
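To make both examples concrete, here is a minimal NumPy-only sketch. The housing-style data, feature names, and tree thresholds are invented for illustration; the point is that a linear model's coefficients and a decision tree's path are themselves the explanation.

```python
import numpy as np

# Synthetic data (assumed for illustration): price rises with size, falls with age
rng = np.random.default_rng(0)
n = 200
size = rng.uniform(50, 150, n)
age = rng.uniform(0, 40, n)
price = 3.0 * size - 2.0 * age + 100 + rng.normal(0, 1.0, n)

# Standardize features so the coefficient magnitudes are comparable
X = np.column_stack([size, age])
X_std = (X - X.mean(axis=0)) / X.std(axis=0)
design = np.column_stack([np.ones(n), X_std])          # intercept + features
coef, *_ = np.linalg.lstsq(design, price, rcond=None)
for name, c in zip(["size", "age"], coef[1:]):
    print(f"{name}: {c:+.1f} per standard deviation")   # sign = direction, size = strength

# A tiny hand-built decision tree (hypothetical thresholds)
tree = {
    "feature": "income", "threshold": 50_000,
    "left": {"leaf": "deny"},
    "right": {"feature": "age", "threshold": 30,
              "left": {"leaf": "approve"}, "right": {"leaf": "refer to an underwriter"}},
}

def predict_with_path(node, x):
    """Return the prediction plus every decision taken on the way to the leaf."""
    path = []
    while "leaf" not in node:
        f, t = node["feature"], node["threshold"]
        if x[f] <= t:
            path.append(f"{f} = {x[f]} <= {t}")
            node = node["left"]
        else:
            path.append(f"{f} = {x[f]} > {t}")
            node = node["right"]
    return node["leaf"], path

print(predict_with_path(tree, {"income": 65_000, "age": 42}))
```

The printed path is a complete, human-readable account of why that applicant received that prediction.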

2. Post-hoc explainability:

For models that are not naturally easy to interpret, such as deep neural networks, you can use post-hoc methods, which explain a model's decisions after it has made them. There are a few ways to do this:

- Local interpretable model-agnostic explanations (LIME): LIME helps you understand the "why" behind a single prediction made by a complicated model. It slightly perturbs the input you care about (for example, by switching off some features or image regions), records how the model's output changes, and fits a simple, interpretable model, typically a weighted linear model, that mimics the complex model in that local neighborhood. The simple model's weights show which parts of the input had the biggest influence on the model's output for that specific case.

Example explanation of an image of an 8 that was misclassified as 3. Top row: The explanatory power curve shows the relative explanatory power as a function of the explanation complexity (here, the mean number of active pixels in the explanation). The dot shows the complexity chosen for the shown explanation (expl. power of 0.8). Mean explanation gives the posterior mean of the projected explanations and variance gives the uncertainty in the explanation. Second row: Explanations with different complexities and relative explanatory powers. Bottom two rows: Individual posterior samples of explanations. Source: Paper
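Here is a minimal NumPy sketch of the LIME idea for tabular inputs, written from scratch rather than with the lime package. It assumes `predict_fn` takes a batch of rows and returns one score per row, represents "switched off" features by a baseline value (zero here), and uses a simple exponential proximity kernel; the real library's sampling and kernel choices differ in detail.

```python
import numpy as np

def lime_explain(predict_fn, x, baseline=None, num_samples=500, kernel_width=0.75, seed=0):
    """Local linear surrogate around instance x (simplified LIME for tabular data)."""
    rng = np.random.default_rng(seed)
    d = x.shape[0]
    baseline = np.zeros(d) if baseline is None else baseline
    # Binary masks: 1 keeps the original feature value, 0 swaps in the baseline value
    z = rng.integers(0, 2, size=(num_samples, d))
    z[0] = 1                                    # always include the unperturbed instance
    y = predict_fn(np.where(z == 1, x, baseline))
    # Proximity weight: samples with fewer features switched off count more
    distance = 1.0 - z.mean(axis=1)
    weights = np.exp(-(distance ** 2) / kernel_width ** 2)
    # Weighted least squares fit of y ~ intercept + z @ coef
    design = np.hstack([np.ones((num_samples, 1)), z])
    sqrt_w = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(design * sqrt_w[:, None], y * sqrt_w, rcond=None)
    return coef[1:]                             # one local importance per feature

# Usage: a linear stand-in "black box", so the correct answer is known in advance
w = np.array([3.0, -2.0, 0.5])
black_box = lambda X: X @ w + 1.0
x = np.array([1.0, 2.0, 4.0])
print(np.round(lime_explain(black_box, x), 3))
```

Because the stand-in black box is linear, the local surrogate recovers each feature's contribution w_i * x_i, i.e. 3, -4 and 2. With a genuinely nonlinear model, the weights only describe behavior near `x`.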

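- Shapley additive explanations (SHAP): SHAP is a method for understanding how much each feature in a model contributes to its final prediction. It is based on Shapley values from cooperative game theory: for each feature, it averages that feature's marginal contribution over all possible combinations of the other features. The contributions add up to the difference between the model's prediction and a baseline (average) prediction.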

For example, imagine your team wins a game. SHAP helps you figure out how much each player contributed to the victory. It's like fairly dividing the credit among the players based on how each one influenced the outcome. To do this, SHAP considers every possible combination of players and how the team's performance changes when each player is added or left out.
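The team analogy maps directly onto the Shapley-value formula that SHAP builds on. The sketch below computes exact Shapley values by brute force over every coalition, which is only feasible for a handful of players; the player names, skill scores, and the bonus for Ana and Ben playing together are hypothetical. The shap library itself relies on faster approximations, so treat this as an illustration of the idea rather than how the library works internally.

```python
from itertools import combinations
from math import factorial

def shapley_values(players, value):
    """phi_i = sum over coalitions S without i of |S|!(n-|S|-1)!/n! * (v(S + i) - v(S))."""
    n = len(players)
    result = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for k in range(n):                         # coalition sizes 0 .. n-1
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for coalition in combinations(others, k):
                s = frozenset(coalition)
                total += weight * (value(s | {p}) - value(s))  # p's marginal contribution
        result[p] = total
    return result

# Hypothetical game: each player adds their skill; Ana and Ben together earn a bonus
SKILL = {"Ana": 10, "Ben": 6, "Cal": 2}
BONUS = 4

def team_value(coalition):
    return sum(SKILL[p] for p in coalition) + (BONUS if {"Ana", "Ben"} <= coalition else 0)

players = ["Ana", "Ben", "Cal"]
vals = shapley_values(players, team_value)
print({p: round(v, 2) for p, v in vals.items()})  # {'Ana': 12.0, 'Ben': 8.0, 'Cal': 2.0}
```

The bonus is split evenly between Ana and Ben because neither earns it alone, and the three values add up to the full team's score of 22.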

- Feature importance analysis: Feature importance analysis highlights the most influential features driving a model’s decisions. Common methods include permutation feature importance, information gain (used in decision trees), and coefficient magnitudes (used in linear models).

Let’s briefly explain them:

- Permutation feature importance: This method randomly shuffles one feature's values, breaking its relationship with the target, and measures how much the model's performance drops. A large drop means the model relies heavily on that feature; little or no drop means the feature matters little.

- Information gain: In decision tree models, information gain measures how much splitting the data on a feature reduces uncertainty (entropy) about the target, and therefore how much it helps the model make accurate predictions. Features with higher information gain are considered more important.

- Coefficient magnitudes: In linear models, the magnitude of the coefficient attached to each feature reflects its relative importance, provided the features are on comparable scales (for example, after standardization). Larger coefficients indicate a stronger influence of that feature on the model's predictions.

By understanding the relative importance of different features, you can gain insights into the underlying data, improve model interpretability, and simplify the model by removing less important features.
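Two of these measures are easy to compute by hand. The sketch below implements permutation importance for any prediction function (using mean squared error as the performance measure) and the entropy-based information gain of a single split; coefficient magnitudes need no extra code beyond reading a fitted linear model's weights. The synthetic data and stand-in model are assumptions for illustration.

```python
import numpy as np

def permutation_importance(predict_fn, X, y, n_repeats=10, seed=0):
    """Average increase in mean squared error when one column is shuffled."""
    rng = np.random.default_rng(seed)
    base_error = np.mean((predict_fn(X) - y) ** 2)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        increases = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            X_perm[:, j] = rng.permutation(X_perm[:, j])  # break the link between feature j and y
            increases.append(np.mean((predict_fn(X_perm) - y) ** 2) - base_error)
        importances[j] = np.mean(increases)
    return importances

def entropy(labels):
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())

def information_gain(labels, mask):
    """Entropy reduction from splitting labels into labels[mask] and labels[~mask]."""
    left, right = labels[mask], labels[~mask]
    w_left = len(left) / len(labels)
    return entropy(labels) - w_left * entropy(left) - (1 - w_left) * entropy(right)

# Synthetic data: y depends strongly on x0, weakly on x1, and not at all on x2
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 3))
y = 4.0 * X[:, 0] + 1.0 * X[:, 1]
model = lambda A: 4.0 * A[:, 0] + 1.0 * A[:, 1]   # stands in for a trained model
print(permutation_importance(model, X, y))

labels = np.array([0, 0, 1, 1])
print(information_gain(labels, np.array([True, True, False, False])))  # 1.0 (perfect split)
print(information_gain(labels, np.array([True, False, True, False])))  # 0.0 (useless split)
```

Shuffling the unused third feature leaves the error unchanged, so its importance is zero, while the first feature comes out well ahead of the second.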

3. Visual explanations:

For models like convolutional neural networks (CNNs), which are commonly used for image recognition, visualization techniques can show which parts of an input image influence the model’s prediction. There are a few ways you can do this:

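- Saliency maps: Saliency maps highlight the regions of an image most relevant to a model’s decision. They are typically computed from the gradient of the predicted class score with respect to each input pixel, so the pixels whose small changes would most affect the score stand out.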

- Gradient-weighted Class Activation Mapping (Grad-CAM): Grad-CAM visualizes class-specific activations in an image. It weights the feature maps of a convolutional layer (usually the last one) by the gradients of the target class score, then combines them into a heatmap highlighting the image regions most important for that class.
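Both techniques reduce to a few array operations once the gradients are available. In a real Keras CNN you would obtain the gradients with automatic differentiation (for example, TensorFlow's `tf.GradientTape`); to keep this sketch self-contained, it uses a tiny hand-written network whose gradient can be written out by hand for the saliency map, and a toy model with global average pooling for Grad-CAM. All weights and activations are made up for illustration.

```python
import numpy as np

def saliency_map(W1, b1, w2, image):
    """Vanilla gradient saliency for score = w2 . relu(W1 @ x + b1), x = flattened image."""
    x = image.ravel()
    pre_activation = W1 @ x + b1
    # Chain rule: d score / d x = W1^T (w2 * relu'(pre_activation))
    grad = W1.T @ (w2 * (pre_activation > 0))
    return np.abs(grad).reshape(image.shape)

def grad_cam(activations, gradients):
    """Grad-CAM heatmap. activations, gradients: (K, H, W) for one conv layer."""
    alphas = gradients.mean(axis=(1, 2))             # channel weights: spatially averaged gradients
    cam = np.tensordot(alphas, activations, axes=1)  # weighted sum of feature maps -> (H, W)
    cam = np.maximum(cam, 0)                         # keep only evidence for the class
    return cam / cam.max() if cam.max() > 0 else cam

# Saliency usage: only pixel (0, 0) is wired into the hidden layer
W1 = np.zeros((3, 16))
W1[:, 0] = [1.0, 2.0, 3.0]
b1 = np.ones(3)
w2 = np.ones(3)
image = np.full((4, 4), 0.5)
sal = saliency_map(W1, b1, w2, image)
print(sal[0, 0], sal.sum())  # 6.0 6.0

# Grad-CAM usage: score = v . (global average of each channel), so
# d score / d A_k = v_k / (H * W) at every position
acts = np.zeros((2, 4, 4))
acts[0, 1, 1] = 1.0   # channel 0 fires at (1, 1) and supports the class
acts[1, 2, 2] = 1.0   # channel 1 fires at (2, 2) and counts against it
v = np.array([1.0, -1.0])
grads = np.broadcast_to(v[:, None, None] / 16, acts.shape)
cam = grad_cam(acts, grads)
print(cam)
```

The saliency map lights up only the one pixel the network can see, and the Grad-CAM heatmap keeps the supporting region at (1, 1) while the ReLU zeroes out the opposing one.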

4. Rule-based explanations:

Rule-based systems, like decision sets, are very interpretable because they provide simple explanations by directly mapping input features to output predictions through a set of straightforward rules. For example, a rule might state: "If age is greater than 30 and income is greater than 50,000, predict high risk." This explicit mapping makes the model's logic transparent and easy to understand.
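The example rule translates directly into code. This sketch stores each rule as readable text alongside its condition, so every prediction comes with the rule that produced it. Strictly speaking it is a rule list (the first matching rule wins), and the "low risk" default is a hypothetical fallback not given in the text.

```python
# Each rule: (human-readable text, condition, prediction). First match wins.
RULES = [
    ("age > 30 AND income > 50,000",
     lambda a: a["age"] > 30 and a["income"] > 50_000,
     "high risk"),
]
DEFAULT = "low risk"  # hypothetical fallback when no rule fires

def predict_with_rule(applicant):
    """Return the prediction and the rule that explains it."""
    for text, condition, label in RULES:
        if condition(applicant):
            return label, f"Rule fired: IF {text} THEN {label}"
    return DEFAULT, "No rule fired: default prediction"

print(predict_with_rule({"age": 35, "income": 60_000}))
print(predict_with_rule({"age": 25, "income": 60_000}))
```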

Rule-based systems can also be used alongside complex models to provide simplified explanations. By extracting rules from a complex model, we can distill its decision-making process into a set of human-readable rules, which improves trust and aids debugging.
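One common way to pull rules out of a complex model is a global surrogate: label a dataset with the black box's own predictions, fit a simple rule that mimics them, and report its fidelity, the share of cases where the rule agrees with the model. To stay short, the sketch below searches only single-feature threshold rules (a decision stump); the black-box function, feature names, and data are hypothetical stand-ins, and real surrogates are usually shallow decision trees or rule sets.

```python
import numpy as np

def extract_stump_rule(X, black_box_labels, feature_names):
    """Try every 'feature > threshold' rule and keep the one that best mimics the black box."""
    best_fidelity, best_rule = -1.0, None
    for j, name in enumerate(feature_names):
        for t in np.unique(X[:, j]):
            rule_says_one = X[:, j] > t
            # Fidelity: fraction of rows where the rule agrees with the black box
            fidelity = float(np.mean(rule_says_one == black_box_labels))
            if fidelity > best_fidelity:
                best_fidelity = fidelity
                best_rule = f"IF {name} > {t:.3g} THEN class 1 ELSE class 0"
    return best_rule, best_fidelity

# Hypothetical black box on normalized features; its second test is always true
rng = np.random.default_rng(0)
X = rng.uniform(0, 1, size=(200, 2))
black_box = lambda A: (A[:, 0] > 0.5) & (A[:, 1] > -1.0)
rule, fidelity = extract_stump_rule(X, black_box(X), ["income", "age"])
print(rule, fidelity)
```

A fidelity well below 1.0 would be a warning that the extracted rule is an oversimplification of what the model actually does.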

These rules can also be used to constrain the behavior of complex models by adding domain-specific knowledge or ethical considerations. This guarantees that the model sticks to these constraints while making predictions. For instance, in a loan application system, a rule could be added to prevent the model from denying a loan solely based on race or gender.
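Here is one minimal way, assuming dictionary-shaped applicant records, to turn the loan example into enforceable code: a hard rule that strips protected attributes before the model sees them, plus an audit rule that flags a decision if changing only a protected attribute flips it. The attribute names, the deliberately biased stand-in model, and both helper functions are hypothetical.

```python
PROTECTED = ("race", "gender")  # hypothetical attribute names

def constrained_predict(model_fn, applicant):
    """Hard rule: the model only ever sees non-protected attributes."""
    visible = {k: v for k, v in applicant.items() if k not in PROTECTED}
    return model_fn(visible)

def decision_changes(predict_fn, applicant, attribute, alternative_values):
    """Audit rule: True if swapping only `attribute` changes the decision."""
    original = predict_fn(applicant)
    return any(predict_fn({**applicant, attribute: value}) != original
               for value in alternative_values)

# A deliberately biased stand-in model, used only to show the checks working
def biased_model(a):
    if a.get("gender") == "female":
        return "deny"
    return "approve" if a["income"] > 40_000 else "deny"

applicant = {"income": 60_000, "gender": "female", "race": "A"}
print(decision_changes(biased_model, applicant, "gender", ["male"]))  # True: flagged
guarded = lambda a: constrained_predict(biased_model, a)
print(decision_changes(guarded, applicant, "gender", ["male"]))       # False
```

Dropping attributes is a blunt constraint: correlated proxies, such as a postcode, can still carry the same information, so checks like this are a starting point rather than a guarantee.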

In essence, rule-based explanations improve the interpretability and trustworthiness of complex machine learning models. Combining complex models with the transparency of rule-based systems allows us to create more reliable and responsible AI.

Let’s walk through a practical example of how to build an XAI.

How to build an explainable AI

To build an explainable AI, you apply some of the techniques we mentioned earlier throughout your project. Here's a practical example using code we wrote. If you have followed our blogs, you’ll recognize that it is an updated version of the code from our article on feed-forward neural networks.

We’ve turned that code into a convolutional neural network (CNN) that classifies images of cats and dogs. If you have already built a model, you can follow the same steps to make it explainable.

Side note: We used a very limited dataset as this is simply for learning purposes.

Here are the steps:

1. Choose an interpretable model (or enhance a complex one):

For simple tasks, you can use an interpretable model like a decision tree, which will give you direct insights into the decision-making process.

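from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Dense

model = Sequential([
    # Convolutional base: 32 filters of size 3x3 over 128x128 RGB images
    Conv2D(32, (3, 3), activation='relu', input_shape=(128, 128, 3)),
    MaxPooling2D(pool_size=(2, 2)),
    # ... other layers ...
    Dense(2, activation='softmax')  # Output layer: one probability each for cat and dog
])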

In our code (above), we're using a CNN, which is not naturally interpretable. As you’ll see later, we applied a post-hoc technique using SHAP to explain our model’s predictions.

2. Train the model:

Next, we trained the CNN using labeled images of cats and dogs. The code includes steps for data loading, preprocessing, augmentation, and model training.

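from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Data augmentation: randomly rotate training images by up to 20 degrees
datagen = ImageDataGenerator(
    rotation_range=20,
    # ... other augmentations ...
)

# Train the model
history = model.fit(
    # ... some parameters ...
    epochs=10,
    # ... other parameters ...
)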

3. Apply XAI techniques:

We then used SHAP to explain the model's predictions. For an image model, the "features" are pixels or groups of pixels, so SHAP values show how much each region of the image pushed the prediction toward "cat" or "dog". We visualized these values to highlight the areas of the image that mattered most for each prediction.
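The authors' full SHAP code is in their repository and is not reproduced here. The shap package offers explainers for deep models (for example `shap.GradientExplainer` and `shap.DeepExplainer`) and a `shap.image_plot` helper for this kind of visualization. To show what is being estimated, here is a from-scratch NumPy sketch, not the shap library's algorithm: it treats square image patches as the players, replaces "absent" patches with a baseline value, and estimates each patch's Shapley value by averaging marginal contributions over random orderings (permutation sampling). `predict_fn` is assumed to take one image and return the score for one class, such as the "dog" probability.

```python
import numpy as np

def patch_shapley(predict_fn, image, patch=4, baseline=0.0, n_permutations=50, seed=0):
    """Monte Carlo Shapley values for square patches (image sides divisible by `patch`)."""
    rng = np.random.default_rng(seed)
    h, w = image.shape[:2]
    rows, cols = h // patch, w // patch
    n = rows * cols
    phi = np.zeros(n)
    for _ in range(n_permutations):
        order = rng.permutation(n)
        current = np.full_like(image, baseline, dtype=float)  # every patch "absent"
        prev_score = predict_fn(current)
        for idx in order:
            r, c = divmod(idx, cols)
            rs, cs = slice(r * patch, (r + 1) * patch), slice(c * patch, (c + 1) * patch)
            current[rs, cs] = image[rs, cs]                    # reveal this patch
            score = predict_fn(current)
            phi[idx] += score - prev_score                     # its marginal contribution
            prev_score = score
    return (phi / n_permutations).reshape(rows, cols)

# Usage: a stand-in "class score" that only looks at the top-left 4x4 patch
image = np.random.default_rng(0).uniform(size=(8, 8))
model = lambda img: float(img[:4, :4].sum())
phi = patch_shapley(model, image, patch=4)
print(np.round(phi, 3))
```

As with exact Shapley values, the patch values sum to the score of the full image minus the score of the all-baseline image, which is what makes the resulting heatmap an additive breakdown of the prediction.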

4. Evaluate and refine:

Analyze the explanations generated by SHAP. Do they make sense in the context of the problem? Are there any unexpected biases or inconsistencies? Use these insights to refine the model, the training data, or the XAI techniques themselves.
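One simple sanity check for the question "do the explanations make sense?" is to measure how much of the positive attribution lands on the animal itself rather than the background. A classifier that mostly looks at, say, grass or indoor furniture may have picked up a shortcut from the training data. The sketch assumes you have a 2D attribution map (for example, SHAP values summed over color channels) and a hand-drawn mask of the animal; both arrays and the 0.5 threshold are hypothetical.

```python
import numpy as np

def attribution_share(attributions, object_mask):
    """Fraction of positive attribution that falls inside the object mask."""
    positive = np.clip(attributions, 0, None)
    total = positive.sum()
    return float(positive[object_mask].sum() / total) if total > 0 else 0.0

attr = np.zeros((4, 4))
attr[0, 0] = 3.0                        # hypothetical evidence on the animal
attr[3, 3] = 1.0                        # hypothetical evidence in the background
mask = np.zeros((4, 4), dtype=bool)
mask[:2, :2] = True                     # hypothetical "animal" region
share = attribution_share(attr, mask)
print(share)  # 0.75
if share < 0.5:                         # hypothetical threshold
    print("Most evidence comes from outside the animal: check for background bias")
```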

There are a few things you need to take into account when building your XAI models:

- Explainability needs: Determine what type of explanations are needed for your specific application and audience. Do you need to understand individual predictions or the overall model behavior?

- Computational cost: Some XAI techniques can be computationally expensive. Consider the trade-off between explainability and efficiency.

- Human-centered design: Present the explanations in a way that is understandable and useful for the intended audience. This may involve visualizations, natural language explanations, or interactive tools.
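To put rough numbers on the computational-cost trade-off: computing Shapley values exactly requires scoring every subset of features, which grows as 2^n, while permutation sampling costs about one model call per feature per sampled ordering. The counts below are simple arithmetic under those assumptions, not benchmarks; 64 features corresponds, for example, to an image split into an 8x8 grid of patches.

```python
def exact_shapley_evaluations(n_features):
    # Every subset (coalition) of features must be scored once
    return 2 ** n_features

def permutation_sampling_evaluations(n_features, n_permutations):
    # Each sampled ordering scores the empty baseline plus one step per feature
    return n_permutations * (n_features + 1)

for n in (10, 20, 64):
    print(n, exact_shapley_evaluations(n), permutation_sampling_evaluations(n, 50))
```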

By following these steps and carefully considering the factors involved, you can build AI systems that are both powerful and explainable, fostering trust and enabling responsible use. To see the entire code, check out the GitHub repository.

Conclusion

We need to know why an AI system made a certain decision, especially if it affects us. XAI can help identify and remove biases in AI systems, and if something goes wrong, XAI can help us figure out why and who is responsible. When we understand how AI works, we are more likely to trust it.

XAI is about making sure AI systems are not just smart but also understandable and trustworthy. It's about ensuring that we can understand why an AI system makes the decisions it does so we can be confident that those decisions are fair, responsible, and benefit everyone.

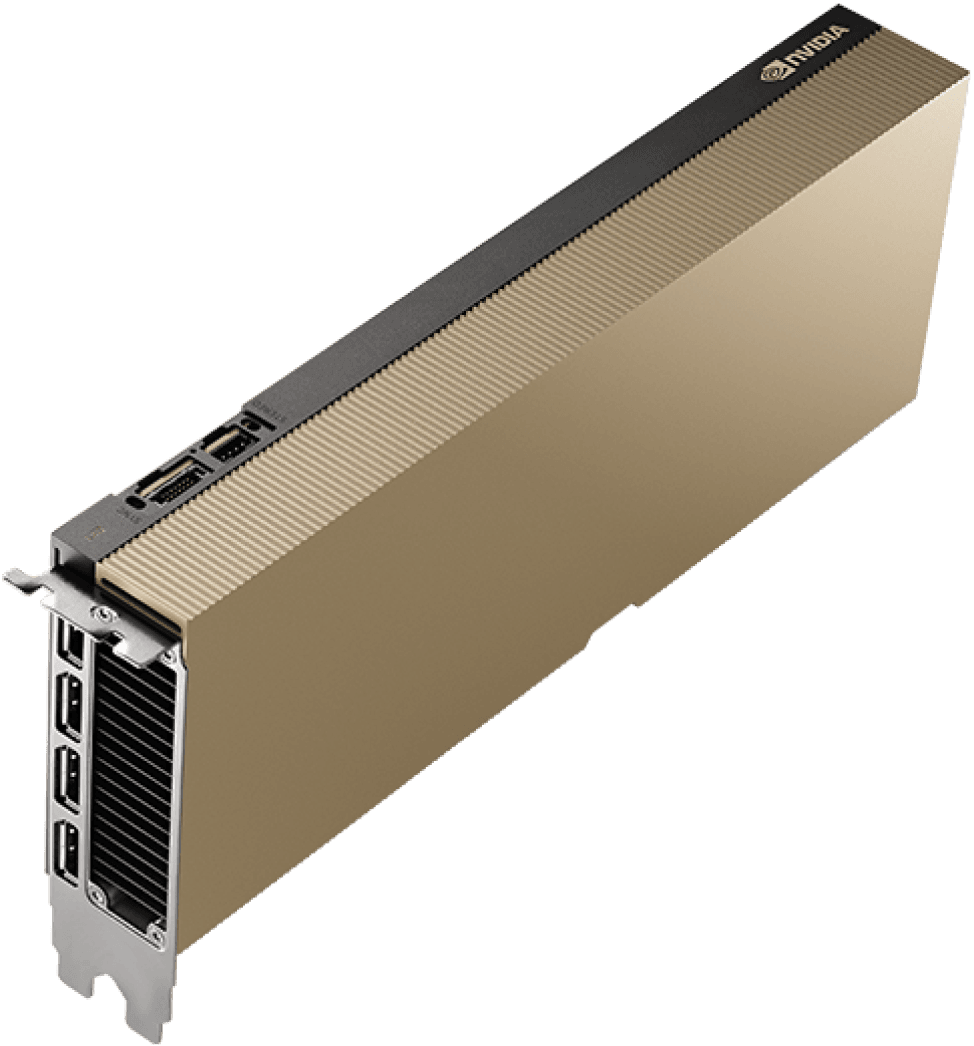

You can build your explainable AIs using CUDO Compute. We offer the latest NVIDIA GPUs, cloud tools and resources to help you build your models seamlessly. Get started today or contact us to learn more.
