Predictive analytics is a branch of advanced data analytics that uses statistical methods, machine learning, and data mining techniques to predict future outcomes based on historical data. It allows organizations to anticipate trends, mitigate risks, and make data-driven decisions in a highly competitive and uncertain world.

From predicting customer behavior to optimizing resource allocation, predictive analytics is at the core of modern business intelligence and strategic planning.

In this article, we’ll explore predictive analytics in depth, covering its fundamentals, methods, applications, and challenges while providing real-world examples to illustrate its transformative impact.

CUDO Compute provides the high-performance GPUs and scalable infrastructure you need for predictive analytics. Deploy your models effortlessly to optimize your business strategies today.

What is predictive analytics?

Predictive analytics involves using data, statistical algorithms, and machine learning techniques to identify patterns and predict future events or behaviors. By analyzing past data, predictive models can estimate the likelihood of specific outcomes with a certain degree of confidence.

Source: Paper

Predictive analytics relies on the identification of relationships and patterns within data. These patterns can be simple correlations or complex interactions between multiple variables. Once these patterns are identified, they can be used to build predictive models to forecast future outcomes based on new input data.

Key attributes of predictive analytics:

- Data-Driven: The accuracy and reliability of predictions directly depend on the quality, quantity, and relevance of the data used. Organizations need to invest in robust data collection and processing to ensure their predictive models are trained on reliable information.

- Future-Oriented: Predictive analytics allows organizations to anticipate trends, identify potential risks and opportunities, and make proactive, strategic decisions.

- Probabilistic: Predictive models provide estimates of the likelihood of different outcomes, acknowledging the inherent uncertainty in predicting the future. They offer a degree of confidence, not absolute certainty.

- Actionable: The insights from predictive analytics should be clear, relevant, and usable for informed decision-making and driving positive change. Organizations need to have processes in place to translate these insights into concrete actions.

- Iterative: Predictive models are continuously refined and improved as new data becomes available. Organizations need to establish a continuous learning cycle, regularly evaluating the model's performance and adjusting it as needed.

- Multidisciplinary: Predictive analytics draws upon statistics, machine learning, data mining, and domain expertise. Successful predictive analytics often requires collaboration between data scientists, domain experts, and business stakeholders.

These attributes highlight the power and versatility of predictive analytics in addressing complex problems and driving strategic decision-making across various industries.

Predictive analytics techniques

Predictive analytics techniques are methodologies and tools used to analyze historical data to forecast future outcomes. These techniques combine data, statistical algorithms, and machine learning models to predict trends, behaviors, or activities.

Common techniques in predictive analytics:

Linear regression:

Linear regression is a straightforward method to predict a continuous dependent variable based on one or more independent variables. It fits a linear equation to the observed data, essentially finding the "line of best fit," which can then be used to predict future values.

One of the key strengths of linear regression is its interpretability. The resulting model is easy to understand, and the coefficients provide clear insights into the relationships between the variables. For example, in a model predicting house prices, the coefficients would reveal how much each factor (like size or location) contributes to the overall price.

Linear regression performs best when there's a clear linear relationship between the variables, which means that a change in one variable results in a proportional change in the other.
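
To make the "line of best fit" concrete, here is a minimal sketch of ordinary least squares with a single predictor, written in plain Python. The house sizes and prices are made-up illustrative numbers, and `fit_line` is a hypothetical helper name rather than a function from any library.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one predictor: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by variance of x gives the slope.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: house size (square metres) vs. price (thousands).
sizes = [50, 70, 90, 110, 130]
prices = [150, 200, 250, 300, 350]

slope, intercept = fit_line(sizes, prices)
predicted_price = slope * 100 + intercept  # estimate for a 100 m² house
print(slope, intercept, predicted_price)
```

The slope is the interpretable part: in this toy data, each extra square metre adds a fixed amount to the predicted price. With several predictors you would normally reach for a library such as Scikit-learn instead of hand-written formulas.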

Logistic regression:

Logistic regression is a statistical method used for predicting the probability of a categorical dependent variable, which typically involves a binary outcome – something that can be either "yes" or "no", "true" or "false", or a similar two-category situation.

Source: Canley

Instead of predicting a specific value, it estimates the probability of an outcome.

Here's how it works: logistic regression analyzes the relationship between the independent variables (the factors that might influence the outcome) and the log odds of the outcome. It then uses this relationship to calculate a probability score.

A key advantage of logistic regression is that its results are relatively easy to interpret. Exponentiating a coefficient gives an odds ratio, which indicates how much a one-unit change in an independent variable multiplies the odds of the outcome occurring.
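
The relationship between log odds, probability, and odds ratios can be sketched in a few lines. The weights, bias, and feature names below are invented for illustration (a real model would learn them from data), so treat this as a sketch of the arithmetic rather than a trained model.

```python
import math

def sigmoid(z):
    """Map log odds (any real number) to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_probability(features, weights, bias):
    # Log odds are a linear combination of the input features.
    log_odds = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(log_odds)

# Hypothetical churn model: features are [support_tickets, years_as_customer].
weights = [0.8, -0.5]
bias = -1.0

p = predict_probability([3, 2], weights, bias)       # log odds = 0.4
p_more = predict_probability([4, 2], weights, bias)  # one extra ticket

# exp(coefficient) is the odds ratio for a one-unit increase in that feature.
odds_ratio_tickets = math.exp(weights[0])
print(round(p, 3), round(odds_ratio_tickets, 3))
```

Here one additional support ticket multiplies the odds of churn by `exp(0.8)`, whatever the values of the other features are.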

Decision trees:

Decision trees use a tree-like structure to make predictions. Think of it like a flowchart: you start at the top with a question, and the answer leads you down different branches until you reach a final decision.

In a decision tree, each internal node tests a feature (a piece of information), each branch represents a decision rule based on that feature, and each leaf node at the end represents the final outcome or prediction.

This structure makes decision trees easy to understand and explain. Even non-technical people can clearly see the model's path to its prediction. They're also versatile and can handle categorical data (like colors or types) and numerical data (like age or income). Another advantage is that they don't make assumptions about the underlying data distribution.

Decision trees are used in a wide variety of applications. For example, banks might use them to assess credit risk by evaluating factors like income and credit score. However, decision trees have some limitations. They can sometimes become overly complex and fail to generalize well to new data. They can also be unstable, meaning small changes in the data can sometimes lead to very different tree structures.
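
The flowchart idea can be shown with a tiny hand-written tree for the credit example. The thresholds, feature names, and labels are hypothetical; in practice a library learns the tree from historical data instead of having it typed in by hand.

```python
# Hypothetical, hand-written tree. Internal nodes test a feature against a
# threshold; leaf nodes carry the final label.
tree = {
    "feature": "credit_score", "threshold": 650,
    "below": {"label": "decline"},
    "at_or_above": {
        "feature": "income", "threshold": 40000,
        "below": {"label": "review"},
        "at_or_above": {"label": "approve"},
    },
}

def predict(node, applicant):
    """Walk from the root to a leaf, following one branch per question."""
    while "label" not in node:
        value = applicant[node["feature"]]
        branch = "below" if value < node["threshold"] else "at_or_above"
        node = node[branch]
    return node["label"]

print(predict(tree, {"credit_score": 700, "income": 55000}))
```

Because every prediction is just a path through the tree, you can read off exactly which questions led to the result.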

Time series analytics:

Time series analytics is a specialized method that focuses on understanding and predicting data that changes over time. Imagine tracking the daily visits to a website, the monthly sales of a product, or the yearly fluctuations in temperature. These are all examples of time series data, where the order of the data points matters because they're connected through time.

Time series analytics goes beyond simply looking at individual data points. It delves into the patterns and trends hidden within the sequence of data. By identifying trends (long-term upward or downward movements), seasonality (recurring patterns at regular intervals), and cyclical patterns (fluctuations that don't have a fixed frequency), we can gain valuable insights.

For example, analyzing website traffic as a time series allows us to see not just how many visitors there are but also how that number changes over time. Are there more visitors on weekends? Is there a seasonal peak during holidays? These insights can inform strategies for optimizing website content and marketing campaigns.
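
As a rough sketch of how trend and seasonality can be pulled out of such a series, the snippet below smooths two weeks of made-up daily visit counts with a 7-day moving average and then averages the visits for each day of the week. The numbers and helper names are illustrative assumptions, and real projects typically use dedicated time series libraries.

```python
def moving_average(values, window):
    """Average of each run of `window` consecutive values (smooths out noise)."""
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]

def average_by_position(values, period):
    """Average at each position within a repeating period, e.g. day of week."""
    return [
        sum(values[i::period]) / len(values[i::period])
        for i in range(period)
    ]

# Hypothetical daily visits for two weeks, starting on a Monday.
visits = [100, 110, 105, 115, 120, 180, 170,
          110, 120, 115, 125, 130, 190, 180]

trend = moving_average(visits, 7)                 # weekly pattern averaged away
weekday_profile = average_by_position(visits, 7)  # index 0 = Monday

print(trend[0], trend[-1])
print(weekday_profile)
```

In this toy data the smoothed trend rises over time, and the weekday profile peaks on Saturday and Sunday, which is the kind of weekend effect the questions above are asking about.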

Clustering:

Clustering is a technique used to discover hidden structures and patterns in data by grouping similar data points together. Imagine it like organizing a messy room: you take a jumble of items and sort them into distinct piles based on their characteristics.

Source: Chire

The process of grouping helps reveal underlying relationships that might not be obvious at first glance. For example, imagine a retailer analyzing their customer data. By clustering customers based on their purchase history, demographics, and browsing behavior, they might discover distinct groups like "budget-conscious shoppers," "tech enthusiasts," or "new parents." These insights can then be used to personalize marketing campaigns, recommend products, and improve customer satisfaction.
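
One common clustering algorithm is k-means (the text above does not name a specific method, so this choice is an assumption). The sketch below groups made-up customers by orders per year and average basket value, starting from two hand-picked initial centroids so the result is repeatable.

```python
def kmeans(points, centroids, iterations=10):
    """Basic k-means: assign each point to its nearest centroid, then move
    each centroid to the mean of its assigned points, and repeat."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            distances = [
                sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids
            ]
            clusters[distances.index(min(distances))].append(p)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [
            tuple(sum(dim) / len(cluster) for dim in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
    return centroids, clusters

# Hypothetical customers: (orders per year, average basket value).
customers = [(2, 20), (3, 25), (4, 22), (20, 150), (22, 160), (25, 155)]
initial = [customers[0], customers[3]]

centroids, clusters = kmeans(customers, initial)
print(centroids)
```

The two groups that come out could be read as occasional low-spend shoppers and frequent high-spend shoppers. In real data, features usually need to be scaled first so that no single feature dominates the distance calculation.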

Neural networks (basic applications):

Neural networks are a fascinating area of machine learning inspired by the structure and function of the human brain. These complex models are designed to learn intricate patterns and relationships in data, making them capable of tackling some of the most challenging tasks in artificial intelligence.

Imagine a network of interconnected nodes, each processing and passing information to the next, much like neurons in the brain. This structure allows neural networks to learn from vast amounts of data, gradually adjusting the connections between nodes to improve their performance.

While neural networks achieve impressive results, they also have some drawbacks. One is their "black box" nature: it can be difficult to understand exactly how a neural network arrives at its decisions. They also typically require large amounts of data to train effectively and can be computationally expensive to train and deploy.
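
To show how information flows through interconnected nodes, here is a minimal forward pass through a two-layer network in plain Python. The weights are set by hand (not learned) so that the network computes XOR, a pattern no single straight line can separate. Training, which is where the connections get adjusted, is deliberately left out of this sketch.

```python
def relu(x):
    """A common activation function: pass positives through, zero out negatives."""
    return max(0.0, x)

def dense(inputs, weights, biases, activation):
    """One layer: each node takes a weighted sum of all inputs, adds a bias,
    and applies the activation function."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

# Hand-set weights for illustration only; a real network learns these.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]

def forward(x1, x2):
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B, relu)
    return dense(hidden, OUTPUT_W, OUTPUT_B, lambda v: v)[0]

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, forward(a, b))
```

Even in this tiny example, the individual weights say little about why the output is what it is, which hints at the interpretability problem in much larger networks.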

To learn more about neural networks, read: What is a neural network?

Other advanced techniques

In addition to the techniques covered in this section, there are many other advanced techniques that can be used for predictive analytics. These techniques include:

- Support vector machines (SVMs): SVMs are classification algorithms that can be used to predict categorical outcomes. They are effective when dealing with complex datasets with many features.

- Random forests: Random forests are an ensemble learning method that combines multiple decision trees to improve accuracy and reduce overfitting.

- Bayesian networks: Bayesian networks are probabilistic graphical models that can be used to represent the relationships between different variables. They are particularly useful for making predictions in the presence of uncertainty.

- Genetic algorithms: Genetic algorithms are search algorithms that find optimal solutions to complex problems. They are often used in predictive analytics to optimize the parameters of machine learning models.

- Neural networks (advanced applications): As mentioned earlier, neural networks are a powerful tool for predictive analytics. However, there are many different types of neural networks, each with its own strengths and weaknesses. Some common types of neural networks include convolutional neural networks (CNNs), recurrent neural networks (RNNs), and feedforward networks.
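
To illustrate the ensemble idea behind random forests, the sketch below shows only the voting step: several simple models each make a prediction, and the majority wins. The three one-question "stumps" and their thresholds are hypothetical and hand-written. A real random forest also trains each tree on a random sample of the data and features, which is omitted here.

```python
from collections import Counter

# Three hypothetical, hand-written stumps (one-question decision trees).
def stump_income(applicant):
    return "approve" if applicant["income"] >= 40000 else "decline"

def stump_score(applicant):
    return "approve" if applicant["credit_score"] >= 650 else "decline"

def stump_debt(applicant):
    return "approve" if applicant["debt_ratio"] < 0.4 else "decline"

def majority_vote(models, applicant):
    """Return the label predicted by the largest number of models."""
    votes = Counter(model(applicant) for model in models)
    return votes.most_common(1)[0][0]

forest = [stump_income, stump_score, stump_debt]
applicant = {"income": 52000, "credit_score": 610, "debt_ratio": 0.25}

decision = majority_vote(forest, applicant)
print(decision)
```

No single stump is very reliable, but combining their votes smooths out individual mistakes, which is the intuition behind why ensembles tend to overfit less than a single deep tree.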

How predictive analytics works

Predictive analytics is a systematic process that transforms raw data into actionable insights. Here's a breakdown of the key steps involved:

Step 1: Defining the objective

It all starts with a clear question. What are you trying to predict? Are you trying to forecast customer churn, anticipate equipment failure, or identify fraudulent transactions? Defining the specific objective is crucial for selecting the right data and techniques.

Step 2: Data collection

The foundation of any predictive model is data. This could involve gathering historical records, real-time data streams, or external sources like social media trends. The data must be relevant to the problem and of sufficient quantity and quality to ensure reliable predictions.

Source: Paper

Step 3: Data preprocessing

Raw data is rarely ready for analytics. This step involves cleaning the data (handling missing values, removing errors), transforming it into a suitable format, and selecting the relevant features for the model.
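
Two of the most common preprocessing tasks, filling in missing values and putting features on a comparable scale, can be sketched as follows. Mean imputation and min-max scaling are just one reasonable choice among several, and the ages are made-up sample values.

```python
def impute_mean(values):
    """Replace missing entries (None) with the mean of the known values."""
    known = [v for v in values if v is not None]
    mean = sum(known) / len(known)
    return [mean if v is None else v for v in values]

def min_max_scale(values):
    """Rescale values linearly so the smallest becomes 0 and the largest 1."""
    lo, hi = min(values), max(values)
    if hi == lo:  # avoid dividing by zero when all values are identical
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical customer ages with two missing entries.
ages = [25, None, 35, 45, None]

filled = impute_mean(ages)
scaled = min_max_scale(filled)
print(filled)
print(scaled)
```

Whichever choices you make here, the same transformation (including the mean, minimum, and maximum computed from the training data) should be applied to new data at prediction time.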

Step 4: Selecting a predictive model

Choosing the right predictive model depends on the nature of the problem and the type of data. As discussed earlier, options include linear regression, logistic regression, decision trees, neural networks, and many others.

Step 5: Training and validation

This involves feeding the historical data to the chosen model and allowing it to learn the underlying patterns and relationships. The model adjusts its internal parameters to minimize errors and improve accuracy.

Step 6: Model evaluation

Before deploying a model, evaluating its performance on a separate dataset that wasn't used for training is essential, as it helps assess how well the model generalizes to new, unseen data.
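
Here is a minimal sketch of the hold-out idea from Steps 5 and 6: shuffle the data, train on one part, and measure the error on the part the model never saw. The data is synthetic, the 75/25 split and seed are arbitrary assumptions, and the model is a simple fitted line to keep the example short.

```python
import random

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and hold out the last fraction for testing."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]

def fit_line(rows):
    """Least-squares line through (x, y) pairs: returns (slope, intercept)."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in rows)
             / sum((x - mean_x) ** 2 for x, _ in rows))
    return slope, mean_y - slope * mean_x

def mean_absolute_error(rows, slope, intercept):
    return sum(abs(y - (slope * x + intercept)) for x, y in rows) / len(rows)

# Synthetic data: y is roughly 2x + 1 with a small alternating wobble.
data = [(x, 2 * x + 1 + (0.5 if x % 2 else -0.5)) for x in range(20)]

train, test = train_test_split(data)
slope, intercept = fit_line(train)          # the model only ever sees `train`
train_error = mean_absolute_error(train, slope, intercept)
test_error = mean_absolute_error(test, slope, intercept)
print(train_error, test_error)
```

A test error far above the training error is the usual warning sign that a model has memorized its training data instead of learning a pattern that generalizes.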

Step 7: Deployment and monitoring

Once the model is deemed satisfactory, it can be deployed to make predictions on new data. Continuous monitoring is crucial to track the model's performance over time and retrain it as needed to maintain accuracy.
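
Monitoring can start out very simple. The sketch below keeps a rolling window of recent prediction errors and flags the model for retraining once the average error crosses a threshold. The class name, window size, and threshold are illustrative assumptions, not a standard API.

```python
from collections import deque

class ErrorMonitor:
    """Tracks the mean absolute error over the most recent predictions."""

    def __init__(self, window, threshold):
        self.errors = deque(maxlen=window)  # old errors drop off automatically
        self.threshold = threshold

    def record(self, actual, predicted):
        """Store one outcome and report whether retraining looks necessary."""
        self.errors.append(abs(actual - predicted))
        return self.needs_retraining()

    def needs_retraining(self):
        # Wait until the window is full so one bad prediction can't trigger it.
        window_full = len(self.errors) == self.errors.maxlen
        return window_full and sum(self.errors) / len(self.errors) > self.threshold

monitor = ErrorMonitor(window=3, threshold=5.0)
outcomes = [(100, 98), (100, 97), (100, 99), (100, 85)]
flags = [monitor.record(actual, predicted) for actual, predicted in outcomes]
print(flags)
```

In this toy run, the first three predictions are close to the actual values, and the flag only trips once a large miss pushes the rolling average above the threshold.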

Step 8: Iterative improvement

Predictive analytics is an ongoing process. As new data becomes available and the business environment changes, models must be refined and updated to remain relevant and accurate.

By following these steps, organizations can leverage predictive analytics to gain valuable insights, make informed decisions, and achieve a competitive edge.

Applications of predictive analytics

Predictive analytics spans multiple industries, transforming operations, customer engagement, and decision-making. Below are some prominent use cases:

1. Healthcare

Hospitals can use predictive models to forecast patient admissions, emergency room visits, and resource needs, allowing them to optimize staffing levels, bed availability, and medical supply inventory, ensuring efficient resource allocation and improved patient care.

Source: Paper

For example, the Mayo Clinic's Health Insights Program uses predictive analytics to identify patients at risk of developing chronic diseases like diabetes and cardiovascular issues. By analyzing electronic health records, genetic information, and lifestyle data, the program develops risk scores for patients. These scores guide early interventions, such as personalized treatment plans and lifestyle adjustments, leading to better patient outcomes and reduced hospital readmissions.

2. Manufacturing

In manufacturing, companies can use predictive analytics for various tasks. For instance, General Electric (GE) uses predictive analytics in its industrial operations to anticipate equipment failures. Through its Predix platform, GE monitors sensor data from turbines, jet engines, and other equipment in real time.

By analyzing the data, GE predicts when machinery will likely need maintenance, allowing for repairs before failures occur. This approach minimizes downtime, reduces maintenance costs, and ensures safer operations in industries like aviation and energy.

3. Sports and entertainment

The National Basketball Association (NBA) uses predictive analytics to enhance player performance and reduce injury risks. Teams like the Golden State Warriors analyze player movement data collected through wearable devices and court sensors. By processing this data with predictive models, they can identify patterns that indicate fatigue or increased injury risk.

For example:

- Teams monitor player workloads to decide when to rest key athletes.

- Analytics help optimize player rotations during games to maintain peak performance levels and prevent overexertion.

This approach has extended players’ careers and improved team performance by ensuring athletes are in top condition during crucial games. This type of analytics is also used to analyze game strategies and predict opponents' moves, giving teams a competitive edge.

The applications of predictive analytics are virtually limitless. As data volumes continue to grow, organizations across all sectors are finding innovative ways to use predictive analytics to gain a competitive edge.

Tools and technologies for predictive analytics

Predictive analytics relies on robust tools and technologies for data processing, modeling, and visualization. Some of the popular tools include:

1. Programming languages:

- Python: Python is arguably the most popular language for predictive analytics due to its extensive libraries for data manipulation, statistical modeling, and machine learning. Libraries like NumPy, Pandas, Scikit-learn, and TensorFlow provide powerful tools for data preprocessing, model building, and evaluation.

- R: R is another widely used language specifically designed for statistical computing and graphics. It offers a comprehensive collection of data analytics, visualization, and machine learning packages, making it easy for statisticians and data scientists to use.

- SQL: While not a dedicated data science language, SQL is important for working with relational databases, a common source of data for predictive analytics. SQL skills are crucial for data extraction, transformation, and loading (ETL) processes.

2. Data analytics platforms:

- RapidMiner: RapidMiner is a popular platform that provides a visual workflow designer for building and deploying predictive models. It offers a wide range of data preprocessing, modeling, and visualization tools, making it accessible to beginners and experienced users.

- KNIME: KNIME is an open-source platform that also uses a visual workflow approach for data analytics and predictive modeling. It supports multiple data sources and algorithms, and its modular design allows easy integration with other tools.

3. Big data frameworks:

- Apache Spark: Spark is an open-source framework for distributed data processing. It's designed to handle large datasets and provides tools for data manipulation, machine learning, and graph processing, making it ideal for big data predictive analytics.

- Apache Hadoop: Hadoop is another popular framework for distributed storage and processing of large datasets. Its core components, Hadoop Distributed File System (HDFS) and MapReduce, provide a scalable and reliable platform for big data analytics.

4. Business intelligence tools:

- Tableau: Tableau is a leading data visualization tool that allows users to create interactive dashboards and reports. It can connect to various data sources and provides a user-friendly interface for exploring data and gaining insights.

- Power BI: Power BI is a business analytics service from Microsoft that provides interactive visualizations and business intelligence capabilities. It allows users to connect to various data sources, build reports, and share insights across their organization.

5. Cloud platforms:

Cloud computing has changed the way businesses approach predictive analytics. Instead of investing in expensive hardware and software infrastructure, organizations can rely on the scalability and flexibility of cloud platforms to access on-demand computing resources, especially when dealing with the large datasets and complex computations often involved in predictive modeling.

Moreover, cloud platforms offer access to GPUs designed for handling complex mathematical computations needed for building predictive models, enabling faster model training and more efficient processing of large datasets.

Conclusion

Predictive analytics is a cornerstone of modern decision-making, transforming how organizations anticipate and prepare for the future. By using data-driven predictions, businesses can stay ahead of trends, minimize risks, and maximize opportunities.

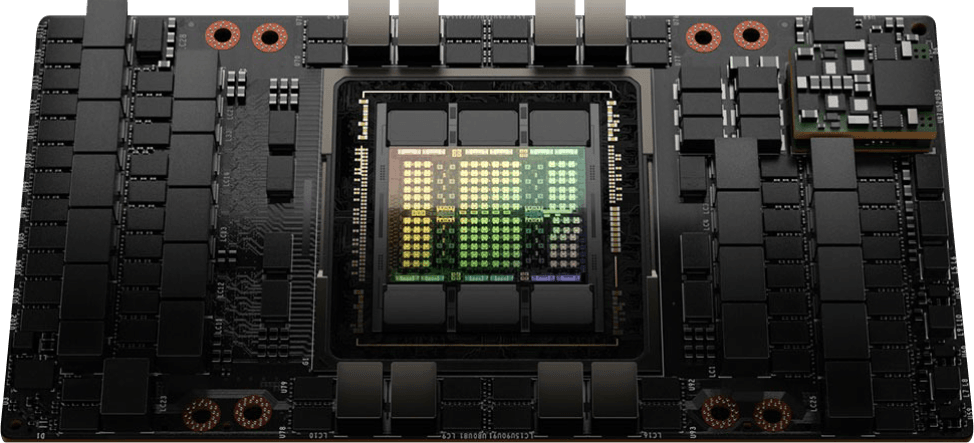

CUDO Compute is the ideal platform for developing your predictive models: access top-tier NVIDIA GPUs, like the NVIDIA H200, at affordable pricing to accelerate your model training.