As demand for AI infrastructure soars, access to readily available, high-performance compute is becoming ever more important. Open-source models such as Llama and Qwen, along with fine-tuning and inference tools such as Axolotl and vLLM, make it easier for organizations to adapt AI to their specific use cases.

However, moving from experiments to production brings challenges, especially in managing and automating AI workloads efficiently. Containers play a key role here, making it easier to manage dependencies, reuse code, and scale workloads efficiently.

Kubernetes and Terraform are mature tools, but they often add unnecessary complexity for AI teams that want to avoid heavy DevOps setups. dstack, a lightweight open-source alternative to Kubernetes and Slurm, simplifies AI infrastructure management without requiring a complex MLOps setup.

After collaborating closely with the dstack team, we are happy to announce that dstack can now be used natively with CUDO Compute. With dstack on CUDO Compute, developers can provision instances, set up dev environments, automate task scheduling, and deploy AI models.

dstack complements CUDO Compute, letting developers manage the training and deployment of AI models in a streamlined way that reduces the MLOps burden.

Key Benefits of Using dstack on CUDO Compute

- Simplified AI infrastructure management: dstack eliminates the need for complex MLOps setups, making it easier for AI teams to manage their infrastructure.

- Streamlined AI development and deployment: dstack provides a unified platform for managing AI workloads from development to deployment.

- Increased efficiency and productivity: dstack automates tasks such as provisioning, scaling, and scheduling, freeing up developers to focus on more strategic work.

- Improved developer experience: dstack provides a user-friendly interface and CLI, making it easy for developers to work with AI infrastructure.

Getting Started with dstack on CUDO Compute

dstack is available as a self-hosted open-source version and a hosted marketplace version.

To use your CUDO Compute account with dstack, create an API key in the CUDO web console and specify it in the ~/.dstack/server/config.yml file:

```yaml
projects:
- name: main
  backends:
  - type: cudo
    project_id: my-cudo-project
    creds:
      type: api_key
      api_key: 7487240a466624b48de22865589
```
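Hardcoding the key works, but teams often prefer to keep secrets out of files they copy around. The following is a minimal sketch, assuming you want to generate the same config.yml from an API key held elsewhere (for example, an environment variable of your choosing). The helpers render_server_config and write_server_config are hypothetical and not part of dstack. They only reproduce the YAML structure shown above.

```python
from pathlib import Path

# Hypothetical helper (not part of dstack): reproduces the server config shown
# above, with the CUDO project ID and API key passed in rather than hardcoded.
CONFIG_TEMPLATE = """\
projects:
- name: {project_name}
  backends:
  - type: cudo
    project_id: {cudo_project_id}
    creds:
      type: api_key
      api_key: {api_key}
"""


def render_server_config(cudo_project_id: str, api_key: str, project_name: str = "main") -> str:
    """Return the YAML text for ~/.dstack/server/config.yml."""
    if not api_key:
        raise ValueError("CUDO API key is empty")
    return CONFIG_TEMPLATE.format(
        project_name=project_name,
        cudo_project_id=cudo_project_id,
        api_key=api_key,
    )


def write_server_config(text: str, path: Path = Path.home() / ".dstack" / "server" / "config.yml") -> Path:
    """Write the config, creating parent directories and restricting permissions."""
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(text)
    path.chmod(0o600)  # the file holds a secret, so make it readable by the owner only
    return path
```

For example, write_server_config(render_server_config("my-cudo-project", os.environ["CUDO_API_KEY"])) would write the file, where CUDO_API_KEY is an assumed variable name rather than one dstack reads itself.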

To use the self-hosted version of dstack, install it via pip (Docker is also an option) and start the server:

```shell
pip install "dstack[all]" -U
dstack server
```

Now you can create a project directory and initialize it for use with dstack:

```shell
mkdir quickstart && cd quickstart
dstack init
```

If you want to set up a dev environment, create a .dstack.yml inside your project directory to specify its configuration:

```yaml
type: dev-environment
# The name is optional, if not specified, generated randomly
name: vscode

python: '3.11'
# Uncomment to use a custom Docker image
# image: dstackai/base:py3.13-0.6-cuda-12.1

ide: vscode

# Use either spot or on-demand instances
spot_policy: auto

# Uncomment to request resources
# resources:
#   gpu: 24GB
```
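As a rough illustration of what spot_policy controls, here is a simplified model of offer selection in plain Python. It is not dstack's scheduler. Offer, pick_offer, and the prices are hypothetical. The sketch assumes auto means "use a spot instance when one fits, otherwise fall back to on-demand", and it treats the requested GPU memory as a minimum.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Offer:
    """A hypothetical cloud instance offer (not a dstack type)."""
    name: str
    gpu_memory_gb: int
    price_per_hour: float
    spot: bool


def pick_offer(offers: List[Offer], spot_policy: str, min_gpu_memory_gb: int = 0) -> Optional[Offer]:
    """Return the cheapest offer allowed by the policy, or None if nothing fits."""
    # Assumption: the requested GPU memory acts as a lower bound.
    fitting = [o for o in offers if o.gpu_memory_gb >= min_gpu_memory_gb]
    spot = [o for o in fitting if o.spot]
    on_demand = [o for o in fitting if not o.spot]

    if spot_policy == "spot":
        pool = spot
    elif spot_policy == "on-demand":
        pool = on_demand
    elif spot_policy == "auto":
        # Prefer spot capacity; fall back to on-demand when no spot offer fits.
        pool = spot or on_demand
    else:
        raise ValueError(f"unknown spot_policy: {spot_policy!r}")

    return min(pool, key=lambda o: o.price_per_hour, default=None)
```

The trade-off this models is that spot capacity is usually cheaper but can be reclaimed by the provider. auto is therefore a reasonable default for interactive dev environments, while on-demand suits runs that must not be interrupted.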

Now apply the dstack configuration:

```shell
dstack apply -f .dstack.yml
```

dstack will automatically provision cloud instances and set up a dev environment according to your configuration so you can access it using your desktop IDE.

Apart from dev environments, dstack supports other abstractions, such as tasks (for training or data processing) and services (for deploying models).

Here’s an example of how you can define a model endpoint configuration:

```yaml
type: service
# The name is optional, if not specified, generated randomly
name: llama31-service

python: "3.10"

# Llama 3.1 is a gated model on Hugging Face, so pass your access token
env:
  - HF_TOKEN
commands:
  - pip install vllm
  - vllm serve meta-llama/Meta-Llama-3.1-8B-Instruct --max-model-len 4096
port: 8000

resources:
  gpu: 24GB

model: meta-llama/Meta-Llama-3.1-8B-Instruct
```
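The --max-model-len 4096 flag matters on a 24GB GPU. The following back-of-the-envelope estimate shows why. It assumes bf16 weights and the published Llama 3.1 8B architecture: 32 layers, 8 key/value heads, head dimension 128, and roughly 8.03B parameters. These are approximations, not measurements of vLLM's actual memory use.

```python
# Approximate Llama 3.1 8B figures (assumed from the model's published config).
NUM_LAYERS = 32
NUM_KV_HEADS = 8       # grouped-query attention: fewer K/V heads than query heads
HEAD_DIM = 128
PARAMS = 8.03e9        # approximate parameter count
BYTES_PER_VALUE = 2    # bf16 / fp16

GIB = 1024 ** 3


def weight_bytes(params: float = PARAMS, bytes_per_value: int = BYTES_PER_VALUE) -> float:
    """Memory needed just to hold the model weights."""
    return params * bytes_per_value


def kv_cache_bytes(num_tokens: int) -> int:
    """KV-cache memory for one sequence of num_tokens tokens."""
    # Two tensors (K and V) per layer, each NUM_KV_HEADS * HEAD_DIM values per token.
    per_token = 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * BYTES_PER_VALUE
    return per_token * num_tokens


print(f"weights:           {weight_bytes() / GIB:.1f} GiB")
print(f"KV cache @ 4096:   {kv_cache_bytes(4096) / GIB:.2f} GiB per sequence")
print(f"KV cache @ 131072: {kv_cache_bytes(131072) / GIB:.1f} GiB per sequence")
```

The script reports about 15 GiB for the weights and 0.50 GiB of KV cache per 4096-token sequence. At the model's full 131,072-token context, a single sequence needs 16 GiB, which cannot fit alongside the weights on a 24GB card. Capping the context length leaves room to cache several sequences at once.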

After you run dstack apply, dstack provisions cloud instances and sets up a ready-to-use endpoint that is compatible with OpenAI's API and has built-in authentication. When configured, dstack also auto-scales the service according to load.
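Because the endpoint speaks the OpenAI API, any HTTP client can call it. The following sketch uses only the Python standard library. Two details are assumptions to check against the endpoint and token shown in your dstack dashboard or CLI output: the base URL (here, a /proxy/models/<project> path on a local dstack server) and the bearer-token scheme. build_chat_request and chat are hypothetical helpers.

```python
import json
import urllib.request


def build_chat_request(base_url: str, token: str, model: str, prompt: str,
                       max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style /chat/completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: the service's built-in auth accepts your dstack token as a bearer token.
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


def chat(base_url: str, token: str, model: str, prompt: str) -> str:
    """Send the request and return the first completion's text."""
    req = build_chat_request(base_url, token, model, prompt)
    with urllib.request.urlopen(req, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

For example, chat("http://127.0.0.1:3000/proxy/models/main", token, "meta-llama/Meta-Llama-3.1-8B-Instruct", "Hello!") should return the model's reply, assuming the service is running and token is your dstack user token. Any OpenAI-compatible client library pointed at the same base URL should send an equivalent request.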

Additionally, dstack's dashboard lets you try the deployed model in a playground and view its endpoint.

Using dstack on CUDO Compute, individuals and teams can manage containerized AI workloads, share resources, and automate setup without complex configurations. Installation requires only the open-source version of dstack, and its configuration easily scales for team use.

For more on configuring dstack, see the official dstack documentation. Together, CUDO Compute and dstack provide accessible high-performance compute and developer-friendly tools for AI development and deployment.

We aim to make CUDO Compute highly compatible with open-source tools, and we welcome feedback from developers to keep improving the developer experience.



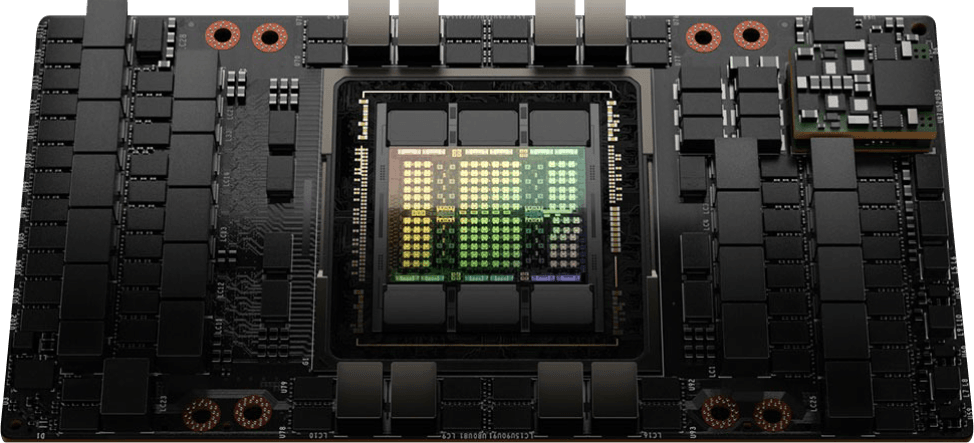

Starting from $2.15/hr

NVIDIA H100's are now available on-demand

A cost-effective option for AI, VFX and HPC workloads. Prices starting from $2.15/hr

Subscribe to our Newsletter

Subscribe to the CUDO Compute Newsletter to get the latest product news, updates and insights.