Building advanced artificial intelligence (AI) systems, such as large language models (LLMs) and deep learning networks, requires immense processing power as their complexity grows. GPU servers provide the necessary computational power to fuel these advancements in AI. But what makes GPUs so well-suited for this task? The answer is in the fundamental differences between CPUs and GPUs.

Central Processing Units (CPUs) and Graphics Processing Units (GPUs) are both processors, but their architectures differ significantly. CPUs excel at sequential processing, making them ideal for running operating systems and handling individual tasks efficiently. However, this sequential approach becomes a bottleneck when dealing with the massive datasets and complex calculations required for training AI models.

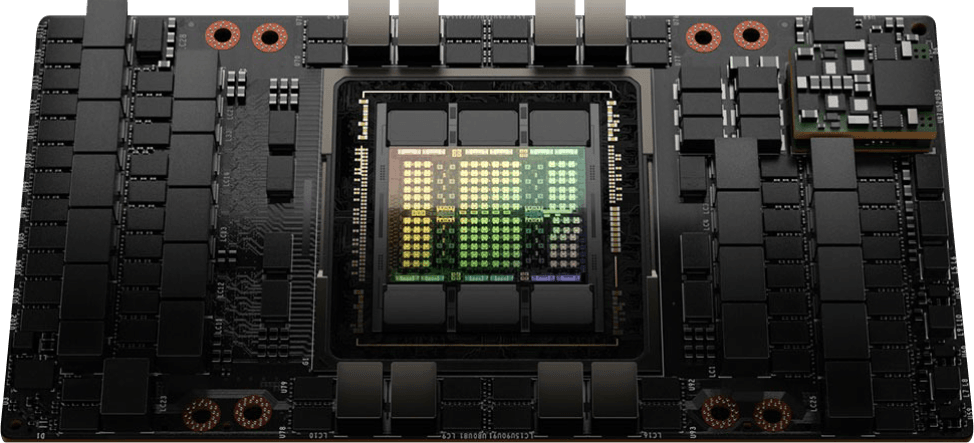

This is why GPUs are used in AI development. Originally designed for rendering video game graphics, GPUs have been repurposed and have become pivotal because of their parallel processing architecture. Unlike CPUs, GPUs can execute thousands of operations simultaneously, making them ideal for accelerating the training of complex AI models.

GPU servers have made AI development easier, reducing training times and enabling the creation of previously unimaginable AI models. This article will delve into everything you need to know about GPU servers for AI. We'll explore their components, applications, challenges, and benefits, equipping you with the knowledge to use them in your AI projects.

What are GPU servers?

GPU servers are dedicated computing systems built to speed up processing tasks that require parallel data computation. They can be used for AI, deep learning, and graphics-intensive tasks. Unlike traditional CPU servers, GPU servers integrate one or more GPUs to significantly enhance performance for specific computational tasks.

Here is how they differ from traditional CPU servers:

Architecture and Features:

The architecture of a GPU server differs from that of a conventional server. As stated earlier, a CPU has a relatively small number of powerful cores optimized for sequential processing, while a GPU has thousands of smaller, simpler cores designed for parallel, multi-threaded processing. Think of a factory: a CPU is like a few highly skilled workers who each handle a task from start to finish, while a GPU is like a large crew working on many parts of the job at the same time. This parallel architecture makes GPUs ideal for algorithms that break large tasks into smaller, independent units, like those found in machine learning and complex simulations.
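The idea of breaking a large task into smaller, independent units can be sketched in a few lines of standard-library Python. This is only an illustration of the decomposition pattern, not of GPU performance: the function names are hypothetical, and Python threads stand in for the many cores a GPU would use.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(values, n_chunks):
    """Split a list into n_chunks nearly equal, independent slices."""
    size, remainder = divmod(len(values), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `remainder` chunks each take one extra element.
        end = start + size + (1 if i < remainder else 0)
        chunks.append(values[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """Work on one chunk; it needs no data from any other chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, n_workers=4):
    """Process the chunks concurrently, then combine the partial results."""
    chunks = split_into_chunks(values, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, chunks))
    return sum(partial_sums)


data = list(range(1_000))
total = parallel_sum_of_squares(data)
```

Because no chunk depends on another, the work can be spread over as many workers as are available. A GPU applies the same pattern at a much finer granularity, with thousands of hardware threads instead of four software ones.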

Furthermore, data-center GPUs typically come with high-bandwidth memory (HBM), which offers far higher bandwidth than the standard system memory found in traditional servers. This matters for AI and deep learning workloads, where the rate at which data can be fed to and processed by the GPU often determines overall performance.

What is a GPU server?

A GPU server is a computer specifically designed for demanding tasks like AI and machine learning. It combines a traditional CPU with one or more powerful graphics processing units (GPUs) for faster processing of complex calculations.

GPU servers can be categorized by their intended use and by the scale of the tasks they are designed for.

Categories of GPU servers:

Single-GPU Servers: These are the most basic type of GPU server, equipped with a single graphics card. They suit small-scale projects, research and development, and entry-level deep learning applications. They offer a cost-effective solution for users who need GPU acceleration but not the power of multiple GPUs.

Multi-GPU Servers: Designed to accommodate several GPUs within a single server chassis, these servers combine the GPUs' compute and memory for higher performance. They are ideal for high-performance computing and large-scale deep learning.

Cloud-Based GPU Servers: These servers have gained popularity due to their scalability, flexibility, and ease of use. Cloud service providers offer GPU instances on demand, allowing users to access powerful GPU resources without investing in physical hardware. They are widely used for large-scale data processing, AI training, and inference tasks.

Here is how Cloud GPU servers compare with hardware GPU servers:

| Feature | Cloud GPU Servers | Hardware GPU Servers |
|---|---|---|
| Cost | Lower upfront cost, pay-as-you-go model | Higher upfront cost, ongoing maintenance costs |
| Scalability | Easily scale resources up or down on demand | Limited scalability, requires manual hardware upgrades |
| Maintenance | Managed by the cloud provider, less IT burden | Requires in-house IT expertise for maintenance and troubleshooting |
| Control & Customization | Limited control over hardware and software configuration | Full control over hardware and software configuration |
| Security | Shared infrastructure, potential security concerns | Dedicated hardware, greater control over security |
| Latency | Potential for network latency impacting performance | Lower latency for local processing |
| Accessibility | Accessible from anywhere with an internet connection | Limited to the physical location of the hardware |

There are many more categories of GPU servers. Each type caters to different needs, from individual developers working on AI projects to large enterprises processing vast amounts of data across multiple global locations.

How GPU servers support AI development

Building, training, and deploying AI and machine learning models on GPU servers is now easier because the surrounding ecosystem has matured. Many libraries and frameworks are optimized for GPUs.

Frameworks such as TensorFlow and PyTorch have built-in GPU support. On NVIDIA hardware they run on top of CUDA, NVIDIA's parallel computing platform and programming model, which makes it easier for developers to build, train, and deploy AI models using GPU servers.

NVIDIA, one of the leading producers of GPUs, provides a suite of developer tools and libraries like CUDA-X AI, including performance-optimized libraries to accelerate AI development. The NVIDIA AI toolkit offers libraries and tools to start from pre-trained models for transfer learning and fine-tuning, which helps maximize the performance and accuracy of AI applications.

AI Models and Algorithms Enhanced by GPUs:

GPUs are particularly effective for training deep learning models, such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), which are widely used in applications ranging from image and speech recognition to natural language processing.

In addition to deep learning, GPUs can also speed up classical machine learning algorithms, such as support vector machines (SVMs) and k-means clustering, whose core computations (for example, distance and kernel calculations) can be parallelized across many data points. Shorter run times let you experiment more and refine models sooner.

Using GPU servers in AI means integrating them into your workflow, choosing the right models and algorithms to benefit from GPU acceleration, and selecting compatible software frameworks. By following these steps, you can unlock new levels of performance and efficiency in your AI projects.

Choosing the Right GPU Server for AI Tasks

Selecting the best GPU server for your AI projects can boost the efficiency of developing your AI applications. Here are key factors and considerations to guide you in choosing the right GPU server for your specific AI needs:

Performance Requirements:

The choice of a GPU server should start with understanding your AI application's performance requirements. Consider the complexity of the AI models you intend to train, the size of your datasets, and the expected inference speed.
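A rough way to turn these requirements into a number is to estimate training time from dataset size and measured throughput. The sketch below is a back-of-the-envelope calculation only: the function name, the throughput figure, and the scaling efficiency are hypothetical, and real throughput should be measured on the target hardware.

```python
def estimate_training_hours(num_samples, epochs, samples_per_second_per_gpu,
                            num_gpus=1, scaling_efficiency=1.0):
    """Estimate wall-clock training time in hours.

    scaling_efficiency (0 to 1] models the overhead of multi-GPU training;
    1.0 means perfect linear scaling, which is rarely achieved in practice.
    """
    total_samples = num_samples * epochs
    effective_throughput = (
        samples_per_second_per_gpu * num_gpus * scaling_efficiency
    )
    return total_samples / effective_throughput / 3600


# Hypothetical workload: 1M samples, 10 epochs, 500 samples/s on one GPU.
single_gpu_hours = estimate_training_hours(1_000_000, 10, 500)
# Same workload on 4 GPUs, assuming 90% scaling efficiency.
four_gpu_hours = estimate_training_hours(
    1_000_000, 10, 500, num_gpus=4, scaling_efficiency=0.9
)
print(f"1 GPU: {single_gpu_hours:.2f} h, 4 GPUs: {four_gpu_hours:.2f} h")
```

Comparing such estimates across candidate servers gives a first indication of whether a single GPU is enough or a multi-GPU configuration is worth the extra cost.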

Memory Capacity:

GPU memory determines how much data can be processed simultaneously. Larger memory capacities allow for larger batches of data to be processed at once, leading to faster training times and more efficient data handling. Ensure the GPU server you choose has sufficient memory to accommodate your models and datasets.
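To check whether a model is likely to fit, a lower bound on memory can be estimated from the parameter count. The sketch below assumes that weights, gradients, and optimizer states are all stored in the same precision, and that the optimizer keeps two values per parameter (as Adam does). It ignores activations, which grow with batch size, so treat the result as a floor rather than a prediction. All names are hypothetical.

```python
BYTES_PER_VALUE = {"fp32": 4, "fp16": 2, "bf16": 2}


def inference_memory_gib(num_parameters, dtype="fp32"):
    """Memory needed just to hold the model weights, in GiB."""
    return num_parameters * BYTES_PER_VALUE[dtype] / 2**30


def training_memory_gib(num_parameters, dtype="fp32",
                        optimizer_states_per_param=2):
    """Lower bound for training: weights + gradients + optimizer states.

    Activations and framework overhead are NOT included.
    """
    values_per_param = 1 + 1 + optimizer_states_per_param
    return num_parameters * values_per_param * BYTES_PER_VALUE[dtype] / 2**30


# Hypothetical 1-billion-parameter model.
params = 1_000_000_000
print(f"weights only (fp16): {inference_memory_gib(params, 'fp16'):.1f} GiB")
print(f"training floor (fp32): {training_memory_gib(params):.1f} GiB")
```

Under these assumptions, full-precision training needs 16 bytes per parameter before any activations are counted, which is why training typically requires several times more GPU memory than inference for the same model.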

Power Consumption and Cooling Requirements:

GPU servers can consume significant power and generate considerable heat. Assess different GPU servers' power consumption and cooling requirements to ensure they align with your operational capabilities and environmental considerations. Energy-efficient GPUs and well-designed cooling systems can reduce operational costs and extend the lifespan of the hardware.

Budget Constraints:

Higher-end GPU servers offer superior performance but are expensive. Balance your performance needs with your budget, considering the initial purchase price and the long-term operational costs, including energy consumption and maintenance.
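One way to weigh purchase price against long-term operating costs is a simple break-even calculation between buying hardware and renting equivalent cloud capacity. The sketch below uses made-up prices purely for illustration; the function name and every figure are hypothetical, and a real comparison should also account for utilization, depreciation, and staffing.

```python
def break_even_hours(purchase_price, on_prem_hourly_cost, cloud_hourly_rate):
    """Hours of use after which owning becomes cheaper than renting.

    on_prem_hourly_cost covers energy, cooling, and maintenance per hour of use.
    Returns None if renting is never more expensive per hour than owning.
    """
    hourly_saving = cloud_hourly_rate - on_prem_hourly_cost
    if hourly_saving <= 0:
        return None
    return purchase_price / hourly_saving


# Hypothetical figures: a 30,000 server, 0.50/hr to run, versus 2.50/hr rented.
hours = break_even_hours(30_000, 0.50, 2.50)
print(f"Break-even after {hours:,.0f} GPU-hours")
```

If the expected usage over the hardware's lifetime is well below the break-even point, a pay-as-you-go option is likely the cheaper choice; if it is well above, owning the hardware starts to pay off.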

Scalability and Future-proofing:

Consider the scalability of the GPU server, especially if you anticipate growing your AI operations. Choosing a server that can be easily upgraded or integrated into a larger cluster can save time and resources in the long run. Additionally, opting for servers that support the latest GPU architectures and technologies can help future-proof your investment.

To use a scalable GPU server without an upfront investment in expensive hardware, use CUDO Compute cloud GPU servers. CUDO Compute has reserved GPUs that come pre-installed with PyTorch, TensorFlow, and MLflow, and you only pay for what you use when you use it. Start building now!

Setting Up and Optimizing GPU Servers for AI

Setting up and optimizing GPU servers for AI applications involves several critical steps to ensure they deliver the best possible performance. Below is a guide to help you efficiently set up your GPU server and optimize it for AI processing tasks.

Can GPUs be used for AI?

Yes, GPUs are highly effective for AI because they handle parallel processing efficiently. This is crucial for training AI models, which simultaneously process massive amounts of data. GPUs significantly accelerate training times, enabling faster development and iteration in AI projects.

Initial Setup and Configuration:

- Hardware Assembly: Begin by securely installing the GPU cards into the server. Ensure proper seating in the PCIe slots and adequate power supply connections.

- System Software Installation: Install the operating system (OS) that supports your AI applications and the GPU hardware. Linux distributions such as Ubuntu are commonly used due to their strong support for AI and machine learning environments.

- Drivers and CUDA Toolkit: Install the latest GPU drivers and the CUDA toolkit from NVIDIA (or the corresponding tools for other GPU brands, such as AMD's ROCm). These are essential for enabling the GPU’s capabilities and for development with AI frameworks.

- AI Frameworks and Libraries: Install AI frameworks such as TensorFlow, PyTorch, or Caffe, which are optimized for GPU usage.
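Once the steps above are complete, it is worth confirming that the framework can actually see the GPUs. The following is a minimal check, assuming PyTorch is the installed framework; the helper name `describe_gpu_setup` is hypothetical, and the function degrades gracefully if PyTorch is missing.

```python
def describe_gpu_setup():
    """Report whether PyTorch is installed and which CUDA devices it can see."""
    try:
        import torch
    except ImportError:
        # PyTorch is not installed in this environment.
        return {"torch_installed": False, "cuda_available": False, "devices": []}

    cuda_available = torch.cuda.is_available()
    devices = []
    if cuda_available:
        devices = [
            torch.cuda.get_device_name(i)
            for i in range(torch.cuda.device_count())
        ]
    return {
        "torch_installed": True,
        "cuda_available": cuda_available,
        "devices": devices,
    }


report = describe_gpu_setup()
print(report)
```

If `cuda_available` is false on a machine with GPUs installed, the usual suspects are a missing or mismatched driver, or a CPU-only build of the framework.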

Performance Optimization:

- Maximizing GPU Utilization: Monitor GPU utilization using tools like NVIDIA’s nvidia-smi to ensure that AI models fully utilize the GPU’s capabilities. Adjust model parameters or batch sizes to optimize GPU workload.

- Memory Management: Efficiently manage GPU memory to prevent bottlenecks. Use techniques like mixed precision training and memory pooling to reduce memory consumption without compromising performance.

- Thermal Management: Maintain optimal cooling to prevent thermal throttling, which can reduce GPU performance. Ensure proper airflow and consider additional cooling solutions if necessary.

- Parallel Processing: Utilize the parallel processing capabilities of GPUs by distributing tasks across multiple GPUs, if available. Frameworks like TensorFlow and PyTorch support multi-GPU setups, enabling faster processing and model training.

- Software Optimization: Regularly update AI frameworks, libraries, and drivers to their latest versions to take advantage of performance improvements and bug fixes. Utilize GPU-optimized algorithms and functions within AI frameworks to enhance performance.
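As one example of the memory-management techniques listed above, mixed precision training can be sketched in PyTorch with `torch.autocast` and a gradient scaler. This is a minimal illustration under stated assumptions, not a tuned training loop: the tiny model, random data, and the function name `mixed_precision_step` are hypothetical, and the code falls back to the CPU (or does nothing) when no GPU or no PyTorch installation is present.

```python
try:
    import torch
    from torch import nn
except ImportError:  # PyTorch not installed; the sketch becomes a no-op
    torch = None


def mixed_precision_step():
    """Run one training step under autocast and return the loss as a float."""
    if torch is None:
        return None

    use_cuda = torch.cuda.is_available()
    device = "cuda" if use_cuda else "cpu"
    # float16 is the common choice on CUDA GPUs; CPU autocast uses bfloat16.
    amp_dtype = torch.float16 if use_cuda else torch.bfloat16

    model = nn.Linear(128, 10).to(device)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
    # Loss scaling guards against float16 gradient underflow; disabled on CPU.
    scaler = torch.cuda.amp.GradScaler(enabled=use_cuda)

    inputs = torch.randn(64, 128, device=device)
    targets = torch.randint(0, 10, (64,), device=device)

    optimizer.zero_grad()
    with torch.autocast(device_type=device, dtype=amp_dtype):
        logits = model(inputs)  # forward pass runs in reduced precision
    # Compute the loss in float32 for numerical stability.
    loss = nn.functional.cross_entropy(logits.float(), targets)

    scaler.scale(loss).backward()
    scaler.step(optimizer)
    scaler.update()
    return loss.item()


loss_value = mixed_precision_step()
```

Running the forward pass in 16-bit precision roughly halves the memory used by activations, which often allows a larger batch size on the same GPU. Newer PyTorch releases expose the scaler as `torch.amp.GradScaler`; the older import is used here for broader compatibility.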

Regular Monitoring and Maintenance:

Maintain the GPU server's health by monitoring system performance, temperature, and power usage. Schedule regular maintenance checks to ensure hardware components function correctly and update software as needed.
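Monitoring of this kind can be scripted around `nvidia-smi`, which can print selected metrics as CSV through its `--query-gpu` and `--format` options. The sketch below assumes an NVIDIA GPU and driver; the helper names are hypothetical and the sample output is made up for illustration. Values are kept as strings because some fields may be reported as unavailable on certain GPUs.

```python
import shutil
import subprocess

QUERY_FIELDS = [
    "utilization.gpu",   # percent
    "memory.used",       # MiB
    "memory.total",      # MiB
    "temperature.gpu",   # degrees Celsius
    "power.draw",        # watts
]


def parse_gpu_stats(csv_text):
    """Turn nvidia-smi CSV output (one line per GPU) into a list of dicts."""
    stats = []
    for line in csv_text.strip().splitlines():
        values = [value.strip() for value in line.split(",")]
        stats.append(dict(zip(QUERY_FIELDS, values)))
    return stats


def read_gpu_stats():
    """Query nvidia-smi; returns [] if the tool is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        [
            "nvidia-smi",
            f"--query-gpu={','.join(QUERY_FIELDS)}",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_stats(result.stdout)


# Made-up sample output for a two-GPU server, used to show the parsed shape.
sample_output = "87, 30512, 40960, 71, 285.40\n12, 1024, 40960, 45, 60.12\n"
parsed_sample = parse_gpu_stats(sample_output)
print(parsed_sample[0])
```

Calling `read_gpu_stats()` on a schedule and logging the results makes it easier to spot sustained low utilization, memory pressure, or rising temperatures before they affect training jobs.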

Properly setting up and optimizing GPU servers can significantly enhance AI application performance. Following these guidelines ensures that your GPU server is well-equipped to handle demanding AI tasks, providing faster insights and more efficient operations.

The demand for high-performance computing solutions like GPU servers will only grow as AI evolves. Businesses, researchers, and developers can significantly enhance their AI projects by effectively utilizing, choosing, and optimizing GPU servers, leading to faster, more accurate results and groundbreaking innovations.

Whether you're looking to launch your project or to scale existing operations, using GPU servers is a step toward unlocking new possibilities in artificial intelligence.
